Most teams can spot the smoke. They see rising ticket volume, a CSAT dip, maybe a few tense emails from a big account. Then the meeting ends and the queue keeps moving. Insight didn’t turn into action. Not because you lack data, but because the signal isn’t packaged in a way your team can route, prioritize, and prove.

Here’s the uncomfortable part. Sampling and score-watching aren’t saving renewals. They create plausible stories with soft edges. You need ticket-level churn signals, driver mappings, and evidence you can paste into an email or a Jira ticket. When every metric links back to the exact quote, stakeholders stop debating and start deciding.

Key Takeaways:

- Treat churn signals as ticket-level fields with traceable quotes, not just scores

- Define owners, SLOs, and handoffs by risk tier so action happens within 24 to 48 hours

- Use drivers as the bridge from messy tags to leadership-ready narratives

- Quantify the cost of delays (volume × severity × ARR) to prioritize fixes

- Run a 6-step workflow weekly to move from signal to outreach to product remediation

- Measure impact on retention, recurrence, and effort to close the loop

Want to see the mechanics behind this approach? See How Revelir AI Works.

Why Churn Signals Stall Between Insight And Action

Churn signals stall when teams mistake high-level scores for actionable proof. Scores flag a trend; they don’t reveal drivers or quotes you can act on today. The fix is ticket-level fields (churn risk, effort, and drivers) plus traceability that collapses debate in the room.

The metrics that matter, and why scores alone mislead

Scores point to smoke, not the fire. A dip in sentiment tells you something’s off, but not where to intervene first, or which accounts to call today. What you actually need are explicit fields you can filter by: churn risk Yes/No, high or low effort, canonical tags, and drivers that roll messy signals into leadership language.

Drivers are the translation layer. They take a sprawl of raw tags and group them into “Billing,” “Account Access,” or “Onboarding,” so your weekly report doesn’t read like a tag salad. Then traceability does the heavy lifting. If every insight links to real transcripts, your CSM can lift a quote into outreach, and your PM can validate root cause without reading 200 tickets. That’s the difference between plausible and provable.

Most dashboards stop at sentiment. They can’t answer: “Which driver is generating the most high-effort, churn-risk conversations among enterprise accounts this month?” With ticket-level metrics and drivers, it’s a two-minute slice. If you want broader context, resources like Nextiva’s customer retention guide reinforce the same principle: precision beats platitudes when retention’s on the line.

Why conventional wisdom fails in the queue

Conventional wisdom usually means sampling and CSAT. You read 50 tickets “to get a feel,” watch a weekly score, and call it insight. The same goes for anecdote-driven triage after a spike: whoever has the loudest example wins. Meanwhile, quiet but compounding friction (like login loops) keeps showing up in the queue and creeping into renewals.

Full-population analysis with traceability changes the equation. When 100% of conversations are processed, you can pivot by driver, segment, and product area without the bias or lag of hand-reading. And because each aggregate number clicks into real examples, nobody’s asking “show me where this came from.” They can see it, and act, without breaking stride.

Filtering by churn risk Yes and high effort shouldn’t require a week of exports. It should take seconds and lead you straight to the transcripts. That’s how you go from “sentiment is falling” to “20% of high-risk tickets came from billing-fee confusion; here are the three quotes product needs.”
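To make that slice concrete, here is a minimal Python sketch. The field names (`churn_risk`, `effort`, `driver`, `quote`) and the sample tickets are assumptions invented for illustration; they are not Revelir's export schema.

```python
from collections import Counter

# Hypothetical ticket records; field names and values are illustrative only.
tickets = [
    {"id": 101, "churn_risk": True, "effort": "high", "driver": "Billing",
     "quote": "I was charged a fee nobody explained."},
    {"id": 102, "churn_risk": True, "effort": "high", "driver": "Account Access",
     "quote": "The login page keeps looping."},
    {"id": 103, "churn_risk": False, "effort": "low", "driver": "Onboarding",
     "quote": "Where do I invite teammates?"},
    {"id": 104, "churn_risk": True, "effort": "high", "driver": "Billing",
     "quote": "Why did my invoice change?"},
    {"id": 105, "churn_risk": True, "effort": "low", "driver": "Billing",
     "quote": "Please confirm my plan."},
]


def high_risk_high_effort(records):
    """Keep tickets flagged churn risk Yes AND high effort."""
    return [t for t in records if t["churn_risk"] and t["effort"] == "high"]


def share_by_driver(records):
    """Return {driver: fraction of the given records}; empty dict if no records."""
    counts = Counter(t["driver"] for t in records)
    total = sum(counts.values())
    return {driver: n / total for driver, n in counts.items()} if total else {}


risky = high_risk_high_effort(tickets)
shares = share_by_driver(risky)

# Print each driver's share with one supporting quote, largest share first.
for driver, share in sorted(shares.items(), key=lambda kv: -kv[1]):
    example = next(t["quote"] for t in risky if t["driver"] == driver)
    print(f"{driver}: {share:.0%} of high-risk, high-effort tickets, e.g. {example!r}")
```

The point of the design is that the aggregate and the quote come from the same filtered list, so the number never drifts away from its evidence.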

The Real Bottleneck Is Operational, Not Detection Accuracy

The real bottleneck isn’t detecting churn signals; it’s owning the response. Detection is table stakes. What breaks is routing, SLOs, and evidence transfer to product. Until you define owners, timelines, and the handoff package, insight just creates another meeting.

What traditional approaches miss about ownership

Most teams can label churn risk. Fewer have a crisp answer to “Who touches this ticket next, with what message, by when?” Ownership blurs at the seams between Support, CSM, and Product. That’s where you lose days. You don’t need perfect models; you need predictable motion.

Start by assigning owners by risk tier and defining SLOs by severity. Tier 1 (high effort, churn risk Yes, high ARR): CSM outreach within 24 hours; Support confirms categorization and attaches a transcript excerpt; Product reviews patterns weekly. Tier 2: 48 hours with a lighter touch. Tier 3: monitor and aggregate. What matters is the motion: consistent, visible, and documented.

When exceptions happen, and they will, make them explicit. If volume spikes beyond capacity, throttle attention to top accounts by ARR. If a driver crosses a threshold (say, >15% of negative sentiment for two weeks), product remediation kicks in even if the queue is busy. Ownership becomes a playbook, not a tug-of-war.
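The driver threshold rule can be written down so nobody has to argue it mid-crisis. The sketch below assumes you already have each driver's weekly share of negative-sentiment tickets; the driver names and numbers are made up, and the 15% and two-week values simply mirror the example above.

```python
def crosses_threshold(weekly_shares, threshold=0.15, consecutive_weeks=2):
    """True if the most recent `consecutive_weeks` shares all exceed `threshold`.

    `weekly_shares` is ordered oldest first.
    """
    if len(weekly_shares) < consecutive_weeks:
        return False
    return all(share > threshold for share in weekly_shares[-consecutive_weeks:])


# Hypothetical share of negative-sentiment tickets per driver, oldest week first.
negative_share_by_driver = {
    "Billing": [0.09, 0.17, 0.21],         # above 15% in both recent weeks
    "Account Access": [0.16, 0.12, 0.18],  # dipped below last week
    "Onboarding": [0.05, 0.06, 0.04],
}

needs_remediation = [
    driver
    for driver, shares in negative_share_by_driver.items()
    if crosses_threshold(shares)
]
print(needs_remediation)
```

Requiring consecutive weeks is a deliberate choice: a single noisy week does not pull product off the roadmap, but a sustained pattern does.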

Who should do what, and when?

Routing should reflect the metric and the driver. High-risk billing issues? CSMs own outreach with a pricing-clarification template. Account access failures? Support leads immediate unblock while CSM sets expectations. Repeating technical instability? PMs own the weekly rollup and remediation brief.

Time is the lever. SLOs tie urgency to value: 24 hours for Tier 1, 48 hours for Tier 2. The content is standardized: a short opening acknowledgment, the exact friction cited, the next step, and a timeline. Add a transcript excerpt to cut the back-and-forth. Nobody should be guessing about tone or context.

You’ll need a release valve. When inbound spikes, focus Tier 1 on accounts above a set ARR floor, then backfill Tier 2 within 72 hours. Document this so no one argues priorities mid-crisis. Clear rules remove friction and keep your best people focused where it matters most.

The Hidden Costs Of Missed And Late Interventions

Late interventions are expensive because they multiply work across teams. Delays inflate support macros, CSM makegoods, and misaligned product sprints. The longer you wait, the more hours you spend on the wrong work, and the harder it is to recover revenue at renewal.

Engineering hours lost to rework

Let’s pretend a login loop drives 120 tickets per month, 60% high-effort, with churn risk present in a third. Detection lags two weeks. Product ships unrelated work. Support writes two new macros. CSMs conduct appeasement calls. You’ve now allocated dozens of hours across three teams to manage a problem that a one-sprint fix could have eliminated.

Multiply that across quarters. “Temporary” workarounds become permanent. Training decks swell to cover edge cases. Agents memorize exceptions. Engineers context-switch to handle escalations, then rush to catch up on roadmap work. The time cost isn’t just the fix; it’s all the scaffolding built to survive the delay.

That scaffolding accumulates interest. The longer you wait, the more normalization sets in (“that’s just how onboarding works”) until the churn number forces a reset. Better to quantify the rework early and trade a week of sprint time for a month of saved friction.

How much does a one-week delay cost?

Directional math is your friend. Take weekly volume for a high-risk driver, estimate severity, and apply an ARR lens. If a driver generates 30 high-risk tickets per week across large accounts and 10% convert to churn without intervention, a one-week delay puts roughly three accounts at risk that timely outreach might have saved. Even at modest ACVs, that’s real money.

You don’t need perfect precision to focus attention. Combine volume × severity × ARR with a confidence range. Present the low and high cases. Then ask the only question that matters: “Is one sprint on the fix cheaper than one week of continued loss?” Nine times out of ten, it is.
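Here is one way to sketch that low/high calculation. The 30 tickets per week comes from the example above; the churn-rate range, the ARR figures, and the sprint cost are placeholder assumptions you would replace with your own numbers. It also assumes each high-risk ticket maps to a distinct account, which overstates risk when one account files several tickets.

```python
def weekly_revenue_at_risk(high_risk_tickets, churn_rate, arr_per_account):
    """Directional estimate: volume x severity x ARR for one week."""
    return high_risk_tickets * churn_rate * arr_per_account


def delay_cost_range(high_risk_tickets, churn_rates, arr_values, weeks=1):
    """Return (low, high) revenue at risk using the extremes of each range."""
    low = weekly_revenue_at_risk(high_risk_tickets, min(churn_rates), min(arr_values))
    high = weekly_revenue_at_risk(high_risk_tickets, max(churn_rates), max(arr_values))
    return low * weeks, high * weeks


# 30 high-risk tickets per week; rates and ARR values are hypothetical.
low, high = delay_cost_range(30, churn_rates=(0.05, 0.15), arr_values=(20_000, 40_000))
sprint_cost = 60_000  # hypothetical fully loaded cost of one sprint

print(f"One-week delay: ${low:,.0f} to ${high:,.0f} at risk")
print("Sprint cheaper than the low case: ", sprint_cost < low)
print("Sprint cheaper than the high case:", sprint_cost < high)
```

If the fix only wins in the high case, say so. Presenting both ends of the range is what keeps the argument honest.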

If you need a framing to socialize the cost conversation, guides like GTMnow’s customer retention primer and Amplitude’s Product Analytics Playbook emphasize tying observable behavior to revenue impact. Same idea here, just anchored in support conversations and ticket-level evidence.

Still managing churn signals in spreadsheets and gut feel? There’s a faster path. Learn More.

What It Feels Like When Risk Turns Into Churn

When risk turns into churn, it doesn’t feel like a dramatic plot twist. It feels like a slow drip of preventable friction. The cues were there (high effort, repeated drivers, frustrated quotes), but nothing bridged insight to action fast enough to matter.

When a high-value account slips quietly

You see a trickle of billing-confusion tickets from a top account. Different contacts, same theme. Nobody’s checking the driver rollup weekly. No owner for outreach. No SLO. Renewal shows up and the stakeholder brings a list of grievances you’ve technically “handled.” You scramble for credits, call in a VP, and still lose leverage.

Now flip it. You spot churn risk in 24 hours because the driver crosses your threshold. The CSM reaches out with three representative quotes and a precise description of the issue. Support confirms it’s a recurring pattern. Product has a one-page brief in the weekly review. You may still need to discount. But you’re negotiating with momentum and a fix path, not excuses.

A 6-Step Workflow That Turns Ticket Signals Into Retention Wins

A practical churn-prevention workflow turns signals into owners, owners into outreach, and outreach into product changes you can measure. It’s repeatable, boring in a good way, and built to run weekly without heroics.

Step 1: Define signals, drivers, and canonical tags

Start by codifying the signals you care about: churn risk Yes/No, high/low effort, frustration cues, and any custom metrics that match your language. Create a concise driver list and map raw tags to canonical tags so your analysis speaks in leadership-ready categories rather than one-off phrases.

Document examples and non-examples so reviewers align on what “churn risk” looks like in your context. Then save standard Data Explorer views such as “Churn Risk Yes by Driver,” “High Effort by Segment,” and “Negative Sentiment by Account Tier.” Governance starts simple and evolves monthly. The goal isn’t perfection; it’s consistency you can trust and audit.
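The raw-tag to canonical-tag to driver mapping in this step is just two lookup tables. A minimal sketch follows; every tag and driver name in it is a hypothetical example, and your own help desk's tags will look different.

```python
# Hypothetical mappings; real tag names will differ per help desk.
RAW_TO_CANONICAL = {
    "billing fee??": "Unexpected Fee",
    "surprise charge": "Unexpected Fee",
    "cant login": "Login Loop",
    "login loop": "Login Loop",
    "sso broken": "SSO Failure",
}

CANONICAL_TO_DRIVER = {
    "Unexpected Fee": "Billing",
    "Login Loop": "Account Access",
    "SSO Failure": "Account Access",
}


def normalize(raw_tag):
    """Trim whitespace and lowercase so near-duplicate tags collapse."""
    return raw_tag.strip().lower()


def driver_for(raw_tag):
    """Map a raw tag to (canonical_tag, driver); unknown tags are flagged for review."""
    canonical = RAW_TO_CANONICAL.get(normalize(raw_tag))
    if canonical is None:
        return ("Unmapped", "Needs Review")
    return (canonical, CANONICAL_TO_DRIVER.get(canonical, "Needs Review"))


print(driver_for("  Cant Login "))
print(driver_for("dark mode request"))
```

Sending unmapped tags to a visible "Needs Review" bucket, rather than dropping them, gives the monthly governance pass a concrete worklist.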

Step 2: Score, threshold, and prioritize with SLOs

Build a volume-by-severity matrix. For example: churn risk Yes + high effort + enterprise segment = Tier 1; churn risk Yes + low effort + SMB = Tier 2; all else = Tier 3 monitoring. Assign SLOs: 24 hours for Tier 1, 48 hours for Tier 2. Publish the rules where everyone can find them.

Capacity matters. Cap weekly outreach and, during spikes, auto-focus Tier 1 work on your largest ARR impact. Make the throttle rules explicit: who pauses, who pivots, and how you’ll catch up. The clarity removes debate and turns resource constraints into managed trade-offs.
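The tier rules and the spike throttle from this step can be sketched in a few lines. The `Ticket` fields and the $100,000 ARR floor are assumptions for illustration. Note that the rules are applied literally here, so a churn-risk, high-effort SMB ticket falls to Tier 3; you may want to add a rule for that combination.

```python
from dataclasses import dataclass

SLO_HOURS = {1: 24, 2: 48, 3: None}  # Tier 3 is monitor-only, so no SLO


@dataclass
class Ticket:
    churn_risk: bool
    effort: str        # "high" or "low"
    segment: str       # "enterprise" or "smb"
    account_arr: int   # annual recurring revenue of the account


def assign_tier(t):
    """Apply the published rules literally; everything unmatched is Tier 3."""
    if t.churn_risk and t.effort == "high" and t.segment == "enterprise":
        return 1
    if t.churn_risk and t.effort == "low" and t.segment == "smb":
        return 2
    return 3


def outreach_queue(tickets, spike=False, arr_floor=100_000):
    """Tier 1 tickets ordered by ARR; during a spike, drop accounts below the floor."""
    tier1 = [t for t in tickets if assign_tier(t) == 1]
    if spike:
        tier1 = [t for t in tier1 if t.account_arr >= arr_floor]
    return sorted(tier1, key=lambda t: t.account_arr, reverse=True)


sample = [
    Ticket(True, "high", "enterprise", 40_000),
    Ticket(True, "high", "enterprise", 250_000),
    Ticket(True, "low", "smb", 8_000),
    Ticket(False, "high", "enterprise", 500_000),
]
print([assign_tier(t) for t in sample])
print([t.account_arr for t in outreach_queue(sample, spike=True)])
```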

Step 3: Triage, routing, and ownership handoffs

Route by metric and driver. Support owns operational fixes and verifies tags. CSM owns outreach for renewal-risk cohorts. Product owns remediation briefs for recurring drivers crossing thresholds. Every handoff includes an insight snippet: the top quote, the driver, counts for the last 30 days, and links to representative tickets.

This “handoff packet” prevents drift. It reduces the back-and-forth that eats a day here, two days there. It also standardizes communication style so customers hear a consistent voice whether the note comes from Support or the CSM. Less friction, faster time to first action.
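One possible shape for the handoff packet is a small record built straight from the ticket data. In this sketch the ticket fields, the `base_url`, and the choice of "top quote" (simply the most recent ticket's quote) are all assumptions; a real team might pick the quote by severity or by hand.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class HandoffPacket:
    driver: str
    top_quote: str
    count_last_30_days: int
    ticket_links: list


def build_packet(tickets, driver, today, base_url="https://example.com/tickets/", max_links=3):
    """Assemble the packet for one driver, or return None if it has no recent tickets."""
    cutoff = today - timedelta(days=30)
    recent = [t for t in tickets if t["driver"] == driver and t["created"] >= cutoff]
    if not recent:
        return None
    # Assumption: the most recent ticket supplies the "top" quote.
    recent.sort(key=lambda t: t["created"], reverse=True)
    return HandoffPacket(
        driver=driver,
        top_quote=recent[0]["quote"],
        count_last_30_days=len(recent),
        ticket_links=[f"{base_url}{t['id']}" for t in recent[:max_links]],
    )


# Hypothetical tickets for illustration.
tickets = [
    {"id": 1, "driver": "Billing", "created": date(2024, 6, 28), "quote": "Why the new fee?"},
    {"id": 2, "driver": "Billing", "created": date(2024, 6, 10), "quote": "Invoice is wrong."},
    {"id": 3, "driver": "Billing", "created": date(2024, 4, 1), "quote": "Old complaint."},
    {"id": 4, "driver": "Onboarding", "created": date(2024, 6, 20), "quote": "Setup is confusing."},
]
packet = build_packet(tickets, "Billing", today=date(2024, 6, 30))
print(packet)
```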

Step 4: Execute outreach with templates and escalations

Create short templates per driver. Acknowledge the issue, reference the exact friction (“I’m looking at three recent examples from your team: X, Y, and Z”), offer the next best step, and set expectations on timing. Keep it human. Keep it specific. Then send within your SLO.

Track time to first contact and a simple resolution-intent flag (“satisfied,” “pending fix,” “escalated risk”). If a Tier 1 account hasn’t responded in 72 hours, escalate to a manager. One line can save a renewal: “We’ve prioritized the fix and here’s what will change by next week.”
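Tracking these timings takes very little code. The sketch below assumes the 72-hour escalation clock starts at first outreach, which the rule above does not spell out, and the timestamps are invented.

```python
from datetime import datetime, timedelta

SLO = {1: timedelta(hours=24), 2: timedelta(hours=48)}
ESCALATE_AFTER = timedelta(hours=72)


def time_to_first_contact(flagged_at, contacted_at):
    """Elapsed time between the ticket being flagged and the first outreach."""
    return contacted_at - flagged_at


def met_slo(tier, flagged_at, contacted_at):
    """True if first contact happened within the tier's SLO window."""
    return time_to_first_contact(flagged_at, contacted_at) <= SLO[tier]


def needs_escalation(tier, contacted_at, customer_replied_at, now):
    """Tier 1 only: escalate when there is no customer reply 72 hours after outreach."""
    if tier != 1 or customer_replied_at is not None:
        return False
    return now - contacted_at >= ESCALATE_AFTER


flagged = datetime(2024, 1, 1, 9, 0)
contacted = datetime(2024, 1, 2, 8, 0)   # 23 hours after the flag
print(met_slo(1, flagged, contacted))
print(needs_escalation(1, contacted, None, now=datetime(2024, 1, 5, 9, 0)))
```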

Step 5: Convert evidence into product remediation

Use a one-page brief to reduce debate. Include the driver, volume, severity, affected segments, the top three quotes, hypothesized root cause, the success metric, and acceptance criteria. Add a prioritization checklist (impact, urgency, effort) to give product a clean decision.

Attach links to the exact tickets. That single move collapses “is this representative?” into “yes, here are the examples.” Product can make a go/no-go call in minutes, not meetings. It’s the evidence that accelerates prioritization, not louder arguments.

Step 6: Measure impact and close the loop

Define a weekly measurement routine. Track retention KPIs for targeted cohorts, recurrence by driver, time to first contact, and post-fix sentiment or effort shifts. Where possible, use A/B cohorts: targeted vs. untargeted, or pre- vs. post-fix windows with similar volumes.
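A pre- vs. post-fix comparison can be as simple as counting a driver's tickets in equal windows on either side of the ship date. This sketch assumes a 14-day window and made-up tickets, and it is a directional check only: it does not control for seasonality or overall volume changes.

```python
from datetime import date


def recurrence_change(tickets, driver, fix_date, window_days=14):
    """Count a driver's tickets in equal windows before and after `fix_date`."""
    before = after = 0
    for t in tickets:
        if t["driver"] != driver:
            continue
        delta = (t["created"] - fix_date).days
        if -window_days <= delta < 0:
            before += 1
        elif 0 <= delta < window_days:
            after += 1
    # Relative change is undefined when nothing happened before the fix.
    change = (after - before) / before if before else None
    return {"before": before, "after": after, "relative_change": change}


# Hypothetical tickets around a fix shipped on 2024-06-15.
tickets = [
    {"driver": "Billing", "created": date(2024, 5, 1)},   # outside the window
    {"driver": "Billing", "created": date(2024, 6, 2)},
    {"driver": "Billing", "created": date(2024, 6, 5)},
    {"driver": "Billing", "created": date(2024, 6, 10)},
    {"driver": "Billing", "created": date(2024, 6, 14)},
    {"driver": "Billing", "created": date(2024, 6, 20)},
    {"driver": "Onboarding", "created": date(2024, 6, 12)},
]
result = recurrence_change(tickets, "Billing", fix_date=date(2024, 6, 15))
print(result)
```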

Share a before-and-after view with quotes. Update playbooks and thresholds monthly. The point is learning velocity. If something isn’t moving the needle, adjust the target, the outreach, or the product criteria. Resources like Helpjuice’s customer retention strategies underscore the same pattern: define, act, measure, refine.

How Revelir AI Operationalizes This Playbook End To End

Operationalizing this playbook requires two things: trustworthy metrics across 100% of tickets and fast paths from aggregate to evidence. Revelir delivers both by turning every conversation into structured, traceable fields, so routing, SLOs, and remediation briefings run on facts, not hunches.

Evidence-backed traceability links every metric to quotes

Revelir makes every aggregate clickable to the exact conversations and quotes behind it. That means a CSM can lift a line into outreach in seconds, and a PM can scan representative tickets to validate root cause without a manual hunt. It’s not just convenient. It’s how you maintain trust in the busiest moments.

Because the traceability is built in, your “handoff packet” practically builds itself: metric, driver, counts, and links that open directly to transcripts. When challenged in a meeting, you click through to proof. That shortens cycles, reduces debate, and gets fixes into sprints faster, exactly where the costs of delay stack up.

Data Explorer, Analyze Data, and flexible ingestion

Revelir’s Data Explorer works like a pivot table for your tickets: filter by churn risk, effort, driver, segment; add or remove columns; sort by volume or severity; then jump straight into Conversation Insights for validation. The Analyze Data feature groups distributions (e.g., churn risk by driver) and shows clickable counts that route you into real examples.

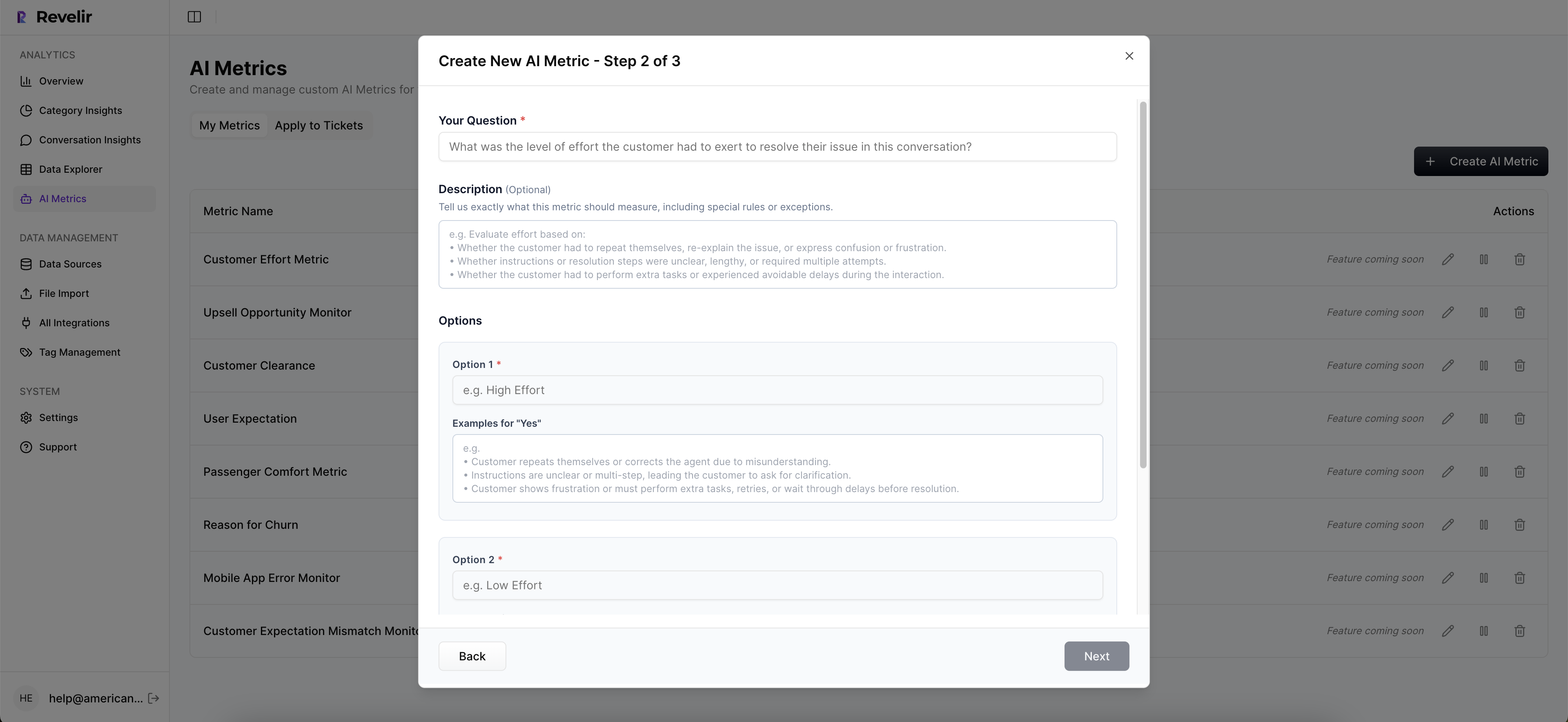

Getting data in is straightforward. You can connect Zendesk for ongoing sync or upload CSVs to start quickly. Revelir processes 100% of tickets, no sampling, and enriches them with AI Metrics like Sentiment, Churn Risk, and Customer Effort (with safeguards when effort lacks signal). You can also define Custom AI Metrics (like Reason for Churn or Upsell Opportunity) and maintain a clean driver framework that leadership understands.

This combination is what enables the workflow above. Define signals and drivers. Threshold and route with SLOs. Execute outreach with quotes. Convert evidence into one-page remediation briefs. Measure impact weekly. Revelir keeps each step fast, auditable, and aligned to revenue.

Ready to operationalize churn prevention without rebuilding your stack? Get Started With Revelir AI.

Conclusion

You don’t need another dashboard. You need a way to turn what customers actually said into metrics your teams can route, act on, and defend. When churn risk, effort, and drivers live at the ticket level, with quotes attached, ownership becomes obvious, SLOs stick, and product fixes land sooner. That’s how you move from spotting smoke to putting out the fire, from insight to renewal.

Frequently Asked Questions

How do I identify the top drivers of churn risk?

To identify the top drivers of churn risk, you can use Revelir AI's Analyze Data feature. Start by filtering your dataset for tickets marked with 'Churn Risk = Yes'. Then, click on 'Analyze Data' and select 'Churn Risk' as the metric to analyze. Group the results by 'Driver' or 'Canonical Tag' to see which issues are contributing most to churn risk. This will help you pinpoint specific areas that need attention and action, allowing your team to address them proactively.

What if I need to prioritize fixes based on customer effort?

If you want to prioritize fixes based on customer effort, use Revelir AI's Data Explorer. Filter tickets by 'Customer Effort = High' to identify where customers are experiencing the most friction. Next, analyze the results by grouping them by 'Canonical Tag' or 'Driver' to understand the context behind the high effort. This process will help you focus on the most impactful issues first, ensuring that your team addresses the problems that matter most to your customers.

Can I track sentiment changes over time?

Yes, you can track sentiment changes over time using Revelir AI. In the Data Explorer, set a date range to filter your ticket data. Then, add sentiment as a column and group by 'Date' or 'Canonical Tag'. This will allow you to visualize how sentiment evolves across different periods or categories. By regularly reviewing these trends, you can identify patterns and take timely actions to improve customer satisfaction.

When should I run a churn risk analysis?

You should run a churn risk analysis regularly, especially after significant product changes or customer feedback spikes. Use Revelir AI's Analyze Data feature to filter for churn risk tickets and analyze the data by driver. This will help you understand if recent changes have impacted customer satisfaction negatively or if new issues have emerged. Regular analysis helps you stay proactive in addressing potential churn before it affects your business.

Why does my team need to validate insights with real conversations?

Validating insights with real conversations is crucial because it ensures that the data reflects actual customer experiences. With Revelir AI, you can click into any metric in the Data Explorer to access Conversation Insights, where you can review the full transcripts and AI-generated summaries. This practice helps your team confirm that the patterns and trends identified in the data align with the reality of customer interactions, fostering trust in the insights and guiding effective decision-making.