Most teams have customer scores at their fingertips. NPS goes up, CSAT dips, someone sends a screenshot. And then… stalemate. You can feel a problem, but you can’t point to what to fix tomorrow. It’s usually not a lack of data. It’s the lack of drivers and proof.

Here’s the part nobody says out loud: when insights aren’t traceable, meetings turn into debates. Product says “show me where this came from.” Finance asks “what’s the ROI?” Without drivers and quotes, you’re left with vibes. You need metrics you can audit, click into, and defend in the room that funds the work.

Key Takeaways:

- Treat scores as a direction, not a decision; pair them with drivers tied to real conversations

- Design metrics backward from decisions: prioritization, churn triage, and coaching

- Eliminate sampling; process 100% of tickets so early risk signals don’t hide

- Make every aggregate traceable to conversations and quotes in one or two clicks

- Operationalize a hybrid taxonomy (raw tags → canonical tags → drivers) and review cadence

- Build a validation loop with acceptance criteria before a metric hits leadership

- Tie dashboards to playbooks so spikes trigger action, not meetings

Ready to skip the theory and see traceable CX metrics in action? See How Revelir AI Works.

Why Scores Without Drivers Lead To Debates, Not Decisions

Scores without drivers slow decisions because they lack the “why” behind movement. Pairing scores with drivers and quotes turns sentiment into fixable issues leadership will fund. When a team sees that “onboarding confusion” drove 36 negative tickets last month and can show three quotes, it becomes a project, not a debate.

What Happens When You Optimize For NPS And CSAT In Isolation?

Optimizing NPS or CSAT alone creates false certainty. You get a trend line, not the root cause. The score tells you “something’s off.” It doesn’t tell you whether new users are stuck on setup or if enterprise workflows are breaking. Same thing with sentiment tiles: they’re loud but thin.

When you attach drivers and traceable evidence, the conversation shifts. “Sentiment down” becomes “onboarding confusion among new accounts.” Now we’re talking concrete fixes: repair the OAuth flow, rewrite step three, update the empty state. You move from “feelings about the score” to “fund the driver.” That’s the job.

The Difference Between A Score And A Driver You Can Fund

A score says “down two points.” A driver says “36 negative tickets from new-user onboarding confusion” and shows where they got stuck. One is a warning light. The other is a work order you can staff. Leadership funds clarity.

Design your metrics so every trend resolves into canonical drivers and representative conversations. That means your taxonomy has to be real-world: raw tags for discovery, canonical tags for consistency, and drivers for narrative. When your score rolls up into drivers, with quotes, you’ve got a funding case, not a plea.

Evidence Beats Anecdotes In The Room Where Priorities Are Set

The first skeptical question is predictable: “Show me an example.” If you can’t click from a bar to tickets and quotes, you lose momentum. People get worried about cherry-picking. Meetings drift.

Build traceability into your metrics from day one. Treat “two clicks to evidence” as a non-negotiable requirement. When an exec sees the exact conversations behind a number, the discussion flips from “is this real?” to “what’s the plan?” That’s how insight becomes action, not meeting fodder. For a broader view on measurement basics, compare guidance like Nextiva’s CX measurement overview with your current stack and gap-check traceability.

Design Measurement Around Decisions, Not Data Exhaust

Decision-first measurement starts from weekly choices (what to fix, who to save, where to coach), then works backward to the metrics and drivers. A traceable metric links an aggregate to specific conversations and quotes for audit. Assign metric owners and cadences so quality doesn’t drift and confidence stays high.

Define The Decisions First: Prioritization, Churn Triage, Coaching

Start with the decisions you actually make. Not vanity dashboards, but the calls you make every week: what product should fix first, which high-risk accounts need outreach, where to coach agents. If a metric doesn’t change one of those, demote it to a view or cut it.

From there, back into the metric design. If you need to prioritize fixes, you’ll want sentiment by driver, churn density by category, and effort patterns for workflows. If you need proactive saves, you’ll want churn-risk flags with the specific drivers attached. Tie each metric to the moment it earns its keep. As a reference point, frameworks like Walker’s guidance on CX measurement fit can help pressure-test whether your metrics map to decisions.

What Is A Traceable Metric In Practice?

A traceable metric lets you click from the aggregate into the exact conversations and quotes behind it. You can pivot by driver, tag, or segment, then open transcripts to verify “yes, that’s what customers actually said.” It’s auditable, repeatable, and defensible, especially when stakes are high.

Set this as a standard in your taxonomy and dashboards. If the number can’t be audited, it doesn’t ship to leadership. This avoids the “trust me” dynamic that kills momentum. Over time, you’ll find the team moves faster because the proof comes built in, not bolted on.
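One way to picture a traceable metric is an aggregate that never lets go of its evidence. The sketch below is a minimal illustration, not any vendor's implementation: the `Ticket` and `TraceableCount` types, the driver names, and the sample quotes are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Ticket:
    ticket_id: str
    driver: str      # hypothetical driver name, e.g. "Onboarding"
    sentiment: str   # "positive", "neutral", or "negative"
    quote: str       # a representative customer sentence


@dataclass
class TraceableCount:
    count: int = 0
    ticket_ids: list = field(default_factory=list)
    quotes: list = field(default_factory=list)


def negative_by_driver(tickets):
    """Count negative tickets per driver, keeping the evidence behind each count."""
    result = defaultdict(TraceableCount)
    for t in tickets:
        if t.sentiment != "negative":
            continue
        bucket = result[t.driver]
        bucket.count += 1
        bucket.ticket_ids.append(t.ticket_id)
        bucket.quotes.append(t.quote)
    return dict(result)


# Made-up sample data for illustration only.
tickets = [
    Ticket("T-1", "Onboarding", "negative", "I can't find where to connect my account."),
    Ticket("T-2", "Onboarding", "negative", "Step three makes no sense."),
    Ticket("T-3", "Billing", "positive", "Refund came through quickly."),
    Ticket("T-4", "Account Access", "negative", "Login keeps failing."),
]

report = negative_by_driver(tickets)
onboarding = report["Onboarding"]
print(onboarding.count, onboarding.ticket_ids)
```

Because the ticket IDs and quotes travel with the count, "show me an example" is a lookup rather than a new analysis.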

The Hidden Costs Of Score Watching And Sampling

Score watching and sampling drain time and hide risk. Teams burn hours exporting CSVs and arguing representativeness instead of fixing problems. Sampling misses early churn cues, turning small issues into escalations. Full coverage with consistent tagging changes the math: less debate, earlier detection, fewer surprises.

Engineering And Ops Hours Lost To Rework And Debate

Every unsourced chart invites debate. Someone exports data. Someone else refactors columns. Hours vanish. Then the argument shifts to “is the sample representative?” while customers wait. It’s frustrating rework, and it compounds. Meanwhile, engineering works on the wrong fix because the “why” is fuzzy.

Quantify it. Let’s pretend you do two “insight pulls” a month, each eating 6–8 hours across CX, analytics, and product. That’s 12–16 hours, up to two working days, gone every month to plumbing and persuasion. Invest that once into a defensible, traceable measurement layer and you get the time back, every time.

Sampling Bias Hides Churn Risk And Delays Fixes

Sampling 10% of 1,000 tickets means reading 100 tickets; at roughly three minutes each, that’s five hours for a partial view. You may still miss the churn signal. The delay turns into escalations, backlog, and burnout. It’s usually the quiet pattern that hurts most, like subtle onboarding friction in a specific segment, because nobody’s checking the full population.

Full coverage eliminates ambiguity. When every conversation is analyzed and linkable, you pivot confidently across cohorts and product areas. You detect risk before it spreads, and you stop arguing about “what’s representative.” Even broad studies, like Get Thematic’s CX statistics roundup, point to the cost of blind spots. Your job is to remove them in your stack.
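To put a rough number on how easily a sample misses a quiet pattern, here is a small sketch. It assumes the 100 tickets are drawn as a simple random sample from 1,000, and the counts of affected tickets (5, 10, 20) are invented for illustration. The function name `prob_sample_misses` is hypothetical; the math is the standard hypergeometric probability of drawing zero affected tickets.

```python
from math import comb


def prob_sample_misses(population: int, affected: int, sample_size: int) -> float:
    """Probability that a simple random sample contains zero affected tickets.

    Hypergeometric: C(population - affected, sample_size) / C(population, sample_size).
    """
    if not 0 <= affected <= population or not 0 <= sample_size <= population:
        raise ValueError("affected and sample_size must be between 0 and population")
    return comb(population - affected, sample_size) / comb(population, sample_size)


# Illustrative scenarios: how many tickets mention the emerging issue.
for affected in (5, 10, 20):
    p = prob_sample_misses(population=1000, affected=affected, sample_size=100)
    print(f"{affected} affected tickets: {p:.0%} chance the sample sees none")
```

Under these assumptions, an issue that shows up in 5 of 1,000 tickets goes completely unseen in roughly 59% of 10% samples. Real sampling is rarely perfectly random, so treat this as an order-of-magnitude guide rather than a forecast.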

Let’s Pretend You Handle 1,000 Tickets A Month

You sample 100, miss early login failures, and ship a doc update instead of fixing auth. Two sprints later, escalations spike, high‑effort conversations climb, renewals wobble. Now the room is tense, and you’re asking for emergency engineering time.

A driver-based metric would’ve shown Account Access as the top driver of negative sentiment with quotes you could show on slide two. Different decision. Earlier. Cheaper. The numbers here are illustrative, but the pattern isn’t hypothetical; it’s how traceability pays for itself in avoided firefights.

Still stuck in manual reviews and sampling? There’s a faster path. See How Revelir AI Works.

When Trust Breaks, Dashboards Lose The Room

Trust breaks when people can’t see where a number came from. If you can click from a chart to tickets and quotes, you keep credibility. Leaders want proof, not poetry. Build auditability into your stack so incident reviews, QBRs, and roadmap debates stay focused on action.

The Finance Leader’s Question You Must Be Ready For

“Show me where this came from.” If you can’t answer that in two clicks, expect the budget conversation to stall. Finance isn’t allergic to CX; they’re allergic to unverified claims. Make it routine to attach three representative conversations to any requested investment.

Do this often enough and the tone in the room changes. Hard asks become clearer asks: “We’re funding this driver because here’s the volume, here’s the impact, and here’s what customers actually said.” That’s when finance leans in rather than leans back.

When Your Biggest Account Says They Feel Ignored

A CSM needs specifics, fast. Churn‑risk flags paired with drivers lead to better outreach. Instead of “we’re sorry,” you can say, “we saw three account access issues in the last two weeks; here are the exact conversations; here’s what we’re fixing.”

It’s personal, timely, and credible. And the internal effect is alignment, not debate. Product sees the same evidence. Support sees the same trend. Leadership sees the same risk. You stop persuading and start repairing.

Why Your Team Needs Auditability During Incidents

Incidents create heat. People are worried about brand damage and renewals. Without traceability, loud anecdotes dominate; coaching gets scattershot; product gets vague asks. Auditability calms the room.

Traceability separates real patterns from noise. You’ve got a concise defect brief tied to quotes. Coaching targets the moments that matter. It’s how you move fast without guesswork, and how you recover trust with customers and internally. If you need a checklist to avoid vanity metrics during chaos, articles like InMoment’s CX metrics guidance can help pressure-test your coverage.

A Practical Playbook For Traceable, Driver-Based CX Metrics

A practical approach starts with a hybrid taxonomy, adds conversation‑derived metrics with clear rules, and enforces a validation loop before anything hits leadership. Operationalize it with saved views, alert thresholds, and playbooks so spikes trigger action, not another meeting.
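As a rough sketch of what "spikes trigger action" can look like, the snippet below ties an alert threshold on each driver to a named playbook and owner. Everything in it is an assumption for illustration: the `AlertRule` type, the thresholds, the playbook wording, and the owners.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    driver: str
    max_negative_per_week: int   # assumed threshold; tune to your own volume
    playbook: str                # hypothetical playbook description
    owner: str                   # hypothetical owning team


RULES = [
    AlertRule("Account Access", 25, "Open a defect brief with three quotes", "Support Ops"),
    AlertRule("Onboarding", 40, "Review the setup flow with product", "Product"),
]


def triggered_playbooks(weekly_negative_counts, rules=RULES):
    """Return the rules whose driver exceeded its weekly negative-ticket threshold."""
    return [
        rule for rule in rules
        if weekly_negative_counts.get(rule.driver, 0) > rule.max_negative_per_week
    ]


# Made-up weekly counts of negative tickets per driver.
this_week = {"Account Access": 31, "Onboarding": 12}
fired = triggered_playbooks(this_week)
for rule in fired:
    print(f"{rule.driver}: {rule.playbook} (owner: {rule.owner})")
```

Keeping the playbook and owner in the same record as the threshold means an alert arrives with its next step already attached.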

Design The Hybrid Taxonomy: Raw Tags To Canonical Tags To Drivers

Start wide with AI‑generated raw tags to surface granular themes and emerging issues. Then group them into canonical tags your leadership actually recognizes. Finally, roll those canonical tags into drivers like Billing, Onboarding, or Account Access. That ladder, from discovery to clarity to narrative, is the backbone of reporting.

Govern it. Decide who can merge tags, how often audits run, and how new patterns get introduced. It won’t be perfect on day one. That’s fine. The point is stability over time and the ability to evolve as language and your product change. Document the rules, then revisit quarterly.
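The ladder from raw tags to canonical tags to drivers can be sketched as two lookup tables. The tag names and mappings below are hypothetical; in practice they would come from your own governance process. Unmapped tags are surfaced rather than dropped, so new patterns show up in the audit instead of vanishing.

```python
from collections import Counter

# Hypothetical mappings for illustration only.
RAW_TO_CANONICAL = {
    "oauth_redirect_loop": "Login Failure",
    "password_reset_email_missing": "Login Failure",
    "setup_step_3_unclear": "Setup Confusion",
    "invoice_amount_wrong": "Invoice Error",
}

CANONICAL_TO_DRIVER = {
    "Login Failure": "Account Access",
    "Setup Confusion": "Onboarding",
    "Invoice Error": "Billing",
}

UNMAPPED = "Unmapped"


def driver_for(raw_tag: str) -> str:
    """Resolve a raw tag to its driver, or flag it as unmapped."""
    canonical = RAW_TO_CANONICAL.get(raw_tag)
    if canonical is None:
        return UNMAPPED
    return CANONICAL_TO_DRIVER.get(canonical, UNMAPPED)


def rollup(raw_tags):
    """Count tickets per driver from a list of raw tags."""
    return Counter(driver_for(tag) for tag in raw_tags)


observed = [
    "oauth_redirect_loop",
    "password_reset_email_missing",
    "setup_step_3_unclear",
    "sso_cert_expired",  # a new raw tag nobody has mapped yet
]
counts = rollup(observed)
print(counts)
```

A growing "Unmapped" bucket is a useful prompt for the quarterly review: either a new canonical tag is needed or an existing one should absorb the pattern.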

Instrument Conversation-Derived Metrics With Concrete Rules

Enable core signals: Sentiment (positive, neutral, negative), Churn Risk (yes/no), and Effort (high/low). Make the rules explicit. Effort requires enough back-and-forth; if it’s not present, show empty values or zero counts rather than guessing. This avoids misleading classifications that erode trust.
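The "empty rather than guessed" rule for effort can be made explicit in the data model. This is a minimal sketch under stated assumptions: the four-message threshold is invented, and `model_effort` stands in for whatever label your classifier produces.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed threshold: fewer messages than this is treated as too little
# back-and-forth to judge effort. Tune it against your own transcripts.
MIN_MESSAGES_FOR_EFFORT = 4


@dataclass
class ConversationMetrics:
    sentiment: str           # "positive", "neutral", or "negative"
    churn_risk: bool         # True means "yes"
    effort: Optional[str]    # "high", "low", or None when not assessable


def effort_or_empty(message_count: int, model_effort: str) -> Optional[str]:
    """Keep the model's effort label only when the conversation is long enough."""
    if message_count < MIN_MESSAGES_FOR_EFFORT:
        return None  # empty value instead of a guess
    return model_effort


def effort_counts(metrics):
    """Count effort labels, reporting empty values separately."""
    counts = {"high": 0, "low": 0, "empty": 0}
    for m in metrics:
        counts[m.effort if m.effort is not None else "empty"] += 1
    return counts


# Made-up conversations: (message count, label the model proposed).
batch = [
    ConversationMetrics("negative", True, effort_or_empty(9, "high")),
    ConversationMetrics("neutral", False, effort_or_empty(2, "high")),
    ConversationMetrics("positive", False, effort_or_empty(5, "low")),
]
summary = effort_counts(batch)
print(summary)
```

Reporting the empty bucket alongside high and low keeps the denominator honest when someone reads the chart.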

Add custom metrics only if they change a decision. Example: a “Reason for Churn” classifier for renewal strategy, or “Expectation Mismatch” for roadmap input. Keep a short list, and tie each to its decision owner. Don’t confuse optional curiosity metrics with decision fuel.

Build A Validation Loop That Proves Every Tile

Adopt a simple checklist before a metric hits the exec view. For any tile, click to tickets. Read a sample and confirm labels match human judgment. Verify the driver grouping aligns with how you’d explain it in a board deck. Only then promote it to the core dashboard.

Set acceptance criteria to reduce drift, like 90% agreement on spot checks. Schedule weekly or monthly reviews by metric owners to confirm the system still “makes sense.” This isn’t red tape; it’s how you keep speed and trust at the same time.
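The acceptance check can be as simple as comparing AI labels against a reviewer's labels on a spot-check sample. The sketch below uses the 90% figure from the text; the function names and sample labels are hypothetical.

```python
ACCEPTANCE_THRESHOLD = 0.90  # the 90% agreement target from the text


def agreement_rate(model_labels, human_labels):
    """Share of spot-checked tickets where the AI label matches the human label."""
    if len(model_labels) != len(human_labels):
        raise ValueError("label lists must be the same length")
    if not model_labels:
        raise ValueError("spot check needs at least one ticket")
    matches = sum(m == h for m, h in zip(model_labels, human_labels))
    return matches / len(model_labels)


def passes_spot_check(model_labels, human_labels, threshold=ACCEPTANCE_THRESHOLD):
    """True when agreement meets or exceeds the acceptance threshold."""
    return agreement_rate(model_labels, human_labels) >= threshold


# Made-up spot check of 20 tickets: the reviewer disagrees on two of them.
model = ["negative"] * 12 + ["neutral"] * 5 + ["positive"] * 3
human = ["negative"] * 11 + ["neutral"] * 6 + ["positive"] * 2 + ["neutral"]

rate = agreement_rate(model, human)
print(f"agreement: {rate:.0%}, promote tile: {passes_spot_check(model, human)}")
```

Simple percent agreement is the easiest bar to explain in a review. It does not correct for agreement that would happen by chance, so a stricter statistic may be worth adding once the loop is running.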

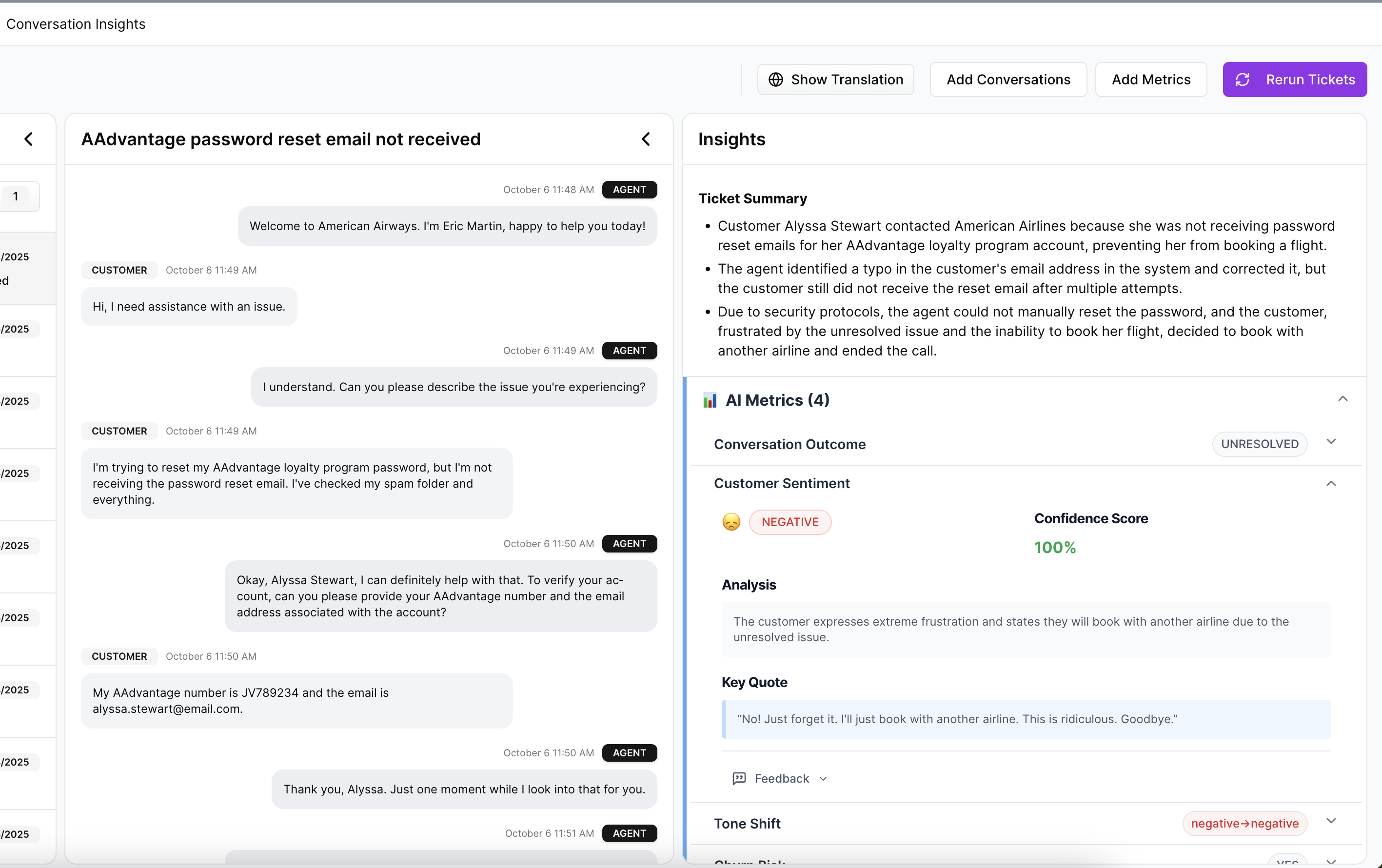

How Revelir AI Operationalizes Driver-Based, Traceable CX Metrics

Revelir turns raw support conversations into evidence‑backed, driver‑based metrics you can trust. It processes 100% of tickets, applies AI metrics and hybrid tagging, and makes every aggregate traceable to transcripts and quotes. The result is faster decisions, fewer escalations, and dashboards that hold up in tough rooms.

Full Coverage Processing With Traceable Drill-Down

Revelir analyzes every ticket, with no sampling, so early churn signals don’t hide. You can pivot by driver, canonical tag, or segment and click any number to jump into the underlying conversations. Each transcript comes with an AI summary and the tags and metrics that produced the aggregate, so “show me an example” is always a two-click move.

This closes the trust gap that slows decisions. When product asks for proof, you have it. When finance asks about impact, you can show volume, severity, and the exact words customers used. It’s evidence, not anecdotes.

AI Metrics And Hybrid Tagging That Reflect Your Language

Out of the box, Revelir assigns sentiment, churn risk, and customer effort (when detectable). AI‑generated raw tags surface granular themes; canonical tags provide consistency; drivers give leadership context. Over time, you refine mappings so future tickets roll up correctly, keeping reports stable even as customer language shifts.

The point isn’t perfection on every ticket. It’s a trustworthy threshold where patterns hold up every time you open the dashboard, and where you can always click through to verify. That’s how you move from volume charts to real, fundable drivers.

Analyze Data And Data Explorer For Grouped Insight

Data Explorer is where you work day-to-day: filter by sentiment, churn risk, drivers, or custom metrics; add/remove columns; and slice the dataset like a pivot table. Analyze Data runs grouped analyses (e.g., Sentiment by Driver) with counts and percentages, so prioritization is obvious, not argued.

From any group, click through to Conversation Insights to validate examples before you act. This tight loop (aggregate, inspect, decide) shrinks the distance from “we think” to “we know” to “we shipped the fix.”
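For readers who want to see what a grouped analysis like Sentiment by Driver computes, here is a generic sketch in plain Python. It is not Revelir's implementation or API; the function name and the sample rows are made up for illustration.

```python
from collections import Counter, defaultdict


def sentiment_by_driver(rows):
    """Group (driver, sentiment) pairs into counts and percentages per driver."""
    grouped = defaultdict(Counter)
    for driver, sentiment in rows:
        grouped[driver][sentiment] += 1

    table = {}
    for driver, counts in grouped.items():
        total = sum(counts.values())
        table[driver] = {
            sentiment: (n, round(100 * n / total, 1))
            for sentiment, n in counts.items()
        }
    return table


# Made-up rows: one (driver, sentiment) pair per ticket.
rows = [
    ("Onboarding", "negative"),
    ("Onboarding", "negative"),
    ("Onboarding", "negative"),
    ("Onboarding", "positive"),
    ("Billing", "neutral"),
    ("Billing", "negative"),
]

table = sentiment_by_driver(rows)
for driver, breakdown in table.items():
    print(driver, breakdown)
```

Showing the count next to the percentage matters: a 50% negative rate on two tickets and on two hundred tickets call for very different responses.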

If you’re ready to operationalize driver‑based, traceable CX metrics without building the plumbing yourself, it’s straightforward. Get Started With Revelir AI and see evidence‑backed insights on your own tickets in minutes.

Conclusion

Scores point. Drivers decide. When every metric rolls up to drivers and links back to real conversations, you stop debating and start fixing. You’ll catch churn risk earlier, prioritize with confidence, and walk into leadership reviews armed with evidence, not hunches. That’s the shift: from score-watching to decisions that stick.

Frequently Asked Questions

How do I analyze customer sentiment trends over time?

To analyze customer sentiment trends using Revelir AI, start by opening the Data Explorer. 1) Apply filters for the time period you're interested in, such as the last month or quarter. 2) Use the 'Sentiment' filter to isolate conversations labeled as positive, neutral, or negative. 3) Click on 'Analyze Data' and choose to group by 'Date' or 'Canonical Tag' to see how sentiment changes over time. This will help you identify patterns and trends in customer feedback, allowing for informed decision-making.

What if I want to identify high-risk customers?

To identify high-risk customers using Revelir AI, you can utilize the churn risk metric. 1) Go to the Data Explorer and apply a filter for 'Churn Risk' set to 'Yes.' 2) Review the resulting tickets to understand the underlying issues causing churn signals. 3) Use the Conversation Insights feature to drill down into individual tickets and gather specific quotes or context that can guide your outreach efforts. This proactive approach can help you address potential churn before it impacts your business.

Can I customize the metrics in Revelir AI?

Yes, you can customize the metrics in Revelir AI to better fit your business needs. 1) Navigate to the settings where you can define custom AI metrics that reflect your specific terminology, such as 'Upsell Opportunity' or 'Reason for Churn.' 2) Specify the questions you want the AI to answer and the possible values for each metric. 3) Once set up, these custom metrics will be applied consistently across your conversations, providing tailored insights that align with your business objectives.

When should I validate insights from Revelir AI?

It's best to validate insights from Revelir AI regularly, especially before presenting findings to leadership. 1) After running analyses in the Data Explorer, click into specific segments to review the underlying tickets. 2) Use the Conversation Insights feature to check that the metrics align with the actual conversations. This validation process ensures that the data is accurate and trustworthy, helping you make informed decisions based on reliable evidence.

Why does my analysis show empty values for customer effort?

If your analysis in Revelir AI shows empty values for customer effort, it typically means that the dataset lacks sufficient conversational cues to support the metric's accuracy. 1) Ensure that the dataset includes enough back-and-forth messaging; otherwise, the customer effort metric will not be enabled. 2) If you are using a new dataset, consider running a larger sample of conversations to gather enough data for reliable metrics. 3) Regularly review and refine your data ingestion process to capture comprehensive customer interactions.