You want agent coaching that actually moves sentiment, not another binder of scripts. That means you need to know which phrases, moments, and sequences correlate with customers getting frustrated—and you need proof you can show in the room. Opinions don’t survive exec review. Evidence does.

Here’s the shift: stop sampling, stop debating anecdotes, and start measuring 100% of conversations with traceable metrics. What holds teams back is usually a tagging and trust problem, not a will problem. Once you fix that, the 90‑day path to real lift is clear.

Key Takeaways:

- Stop sampling—analyze 100% of conversations so coaching targets aren’t biased by anecdotes

- Pair sentiment with drivers and quotes to turn “be nicer” into specific coachable behaviors

- Define sentinel cohorts, set a clean baseline, and freeze definitions during the coaching window

- Build micro‑lessons from real quotes; keep modules tiny and practice weekly

- Run simple control vs. coaching experiments to isolate lift and avoid false attribution

- Use a traceable system so every KPI rolls up to tickets you can open in one click

Why Most Coaching Programs Stall on Anecdotes

Most coaching programs stall because they run on partial views—handfuls of calls, a few CSAT verbatims, and gut feel. The key signal lives in the transcripts, not the dashboard tiles. When you only skim a sliver, you miss early churn cues and tonal triggers that should shape coaching.

The sampling trap keeps you guessing

Sampling seems efficient. It isn’t. You hardwire delay and bias into your coaching loop when you read 10% and assume the other 90% behaves the same. The quiet but costly patterns—onboarding friction, expectation mismatches—rarely yell. They whisper. And nobody’s checking the whisper.

We’ve all seen this play out. A spike hits, leaders read a batch of “representative” tickets, and coaching pivots to the loudest theme. Same thing with quarterly reviews where one gnarly thread gets airtime and becomes the plan. The fix isn’t more reading; it’s full‑population analysis with traceability so debates about representativeness stop and behavior change starts.

When you analyze 100% of conversations, you get coverage and confidence. You can pivot by cohort, driver, or tag, then drill to exact quotes. Now your coaching targets aren’t guesses—they’re patterns that hold up when someone asks “Where did this come from?”

Why scores without drivers mislead plans

A downward sentiment curve tells you something’s wrong. It doesn’t tell you what to fix. Scores alone push teams toward generic apologies and broad scripts. That wastes cycles and erodes trust. What you need is sentiment with drivers and frustration signals, linked to quotes you can put in front of agents.

Tie the metric to the cause: “Negative sentiment among new customers is driven by setup confusion and refund expectations.” Then show the words that trigger the tag. Now your coaching is concrete: the phrase to avoid, the sequencing to swap, the expectation to set earlier. If you want a solid bridge from research themes to coaching targets, aligning themes to measurable agent behaviors is the move. For practical guidance, see this overview on aligning research themes with coaching metrics.

Traceability tightens the loop. When a leader asks why, you click the number and open the conversation. No stitched spreadsheets. No debate over cherry‑picking. Just the evidence, front and center.

Ready to stop guessing and coach on evidence? See the workflow end‑to‑end. See how Revelir AI works.

Define Success So You Can Prove Change

Coaching only “works” when you can attribute lift to the program, not to noise. Define sentinel cohorts up front, pick defensible KPIs agents can influence, and lock your baseline. Then measure pre vs. post using the same definitions and windows.

What is a sentinel cohort and why use it?

A sentinel cohort is the segment where sentiment really matters for the business and where coaching can realistically move the needle. Think new customers in their first 30 days, enterprise accounts in renewal, or your top ARR tier. The point is focus. Not every ticket should influence your coaching KPI in the same way.

Start simple. Pick two or three cohorts. Write them down. Then make sure your analysis can filter by those cohorts consistently: same fields, same timeframes. This avoids mix effects (one cohort improving while another worsens) and gives you cleaner attribution when a manager asks, “Did coaching help, or did we just have fewer billing issues this month?” And yes, aligning “themes” with cohorts makes your coaching targets measurable across segments, which helps when you move from pilot to rollout, as in the themes-to-metrics approach.
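One way to "write them down" is to write the cohorts as code, so the same rule gets applied every time you rerun the numbers. This is a minimal sketch, not a product feature: the ticket fields (`account_created`, `opened`, `arr_tier`) and the cohort names are assumptions, so rename them to match whatever your help desk export provides.

```python
from datetime import date

# Hypothetical ticket fields: "account_created", "opened", "arr_tier".
# Each cohort is a named rule, so the definition lives in exactly one place.
SENTINEL_COHORTS = {
    "new_customers_30d": lambda t: (t["opened"] - t["account_created"]).days <= 30,
    "top_arr_tier": lambda t: t["arr_tier"] == "top",
}

def cohorts_for(ticket):
    """Return the names of every sentinel cohort this ticket belongs to."""
    return [name for name, rule in SENTINEL_COHORTS.items() if rule(ticket)]

# Made-up tickets, for illustration only.
tickets = [
    {"id": 1, "account_created": date(2024, 5, 1), "opened": date(2024, 5, 10), "arr_tier": "mid"},
    {"id": 2, "account_created": date(2022, 1, 15), "opened": date(2024, 5, 11), "arr_tier": "top"},
    {"id": 3, "account_created": date(2023, 3, 2), "opened": date(2024, 5, 12), "arr_tier": "low"},
]

for t in tickets:
    print(t["id"], cohorts_for(t))
```

Ticket 3 lands in no cohort, and that's fine. It still gets analyzed; it just doesn't move the coaching KPI.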

When you scope cohorts early, you cut arguments later. You also make it easier to run A/B coaching at the agent level inside each cohort, so coached and uncoached agents are compared on the same kind of tickets without a research headache.

Choose business-weighted KPIs you can defend

Measure percent negative sentiment, but weight it with severity signals your execs respect. Combine negative share with churn‑risk density or high‑effort share inside each sentinel cohort. If you care about retention, a small drop in negative share paired with a big drop in churn‑risk flags is the win.

Agents can influence tone, expectation setting, and escalation prevention. They can’t fix a product defect today. So separate “agent‑influence” metrics (tone, effort, resolution path) from “product‑influence” metrics (bug frequency, missing capability). Then report both. If we’re being honest, mixed KPIs invite finger‑pointing. Cleaner separation keeps coaching accountable and productive.

Keep KPIs simple enough to explain on a slide and stable enough to hold up across weeks. Over‑engineered composites look smart until they’re impossible to reproduce.
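Here's roughly what "simple enough to explain on a slide" looks like in code. This sketch assumes each ticket already carries three boolean flags (`negative`, `churn_risk`, `high_effort`). Those field names are placeholders for whatever your tagging produces. It reports the three shares side by side and deliberately doesn't blend them into a composite score.

```python
def cohort_kpis(tickets):
    """Percent negative, churn-risk share, and high-effort share for one cohort."""
    total = len(tickets)
    if total == 0:
        return None  # no tickets, no KPI; avoids dividing by zero

    def share(flag):
        # Fraction of tickets in the cohort where the flag is set.
        return sum(1 for t in tickets if t[flag]) / total

    return {
        "tickets": total,
        "pct_negative": share("negative"),
        "churn_risk_share": share("churn_risk"),
        "high_effort_share": share("high_effort"),
    }

# Made-up tickets for a single cohort, for illustration only.
new_customer_tickets = [
    {"negative": True,  "churn_risk": True,  "high_effort": True},
    {"negative": True,  "churn_risk": False, "high_effort": True},
    {"negative": False, "churn_risk": False, "high_effort": False},
    {"negative": False, "churn_risk": False, "high_effort": True},
]

kpis = cohort_kpis(new_customer_tickets)
print(kpis)
```

Every number is a plain count over a plain count, so anyone can reproduce it from the ticket list.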

Establish a clean baseline and time window

Lock a pre‑period baseline by cohort—say the last 30 or 60 days. Freeze definitions during the coaching window: tags, drivers, and KPI formulas. Moving targets kill confidence. Use rolling windows (e.g., 14‑day) to dampen weekly variance, then compare pre vs. post in the same cohorts with the same agents.
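A rolling window is easy to get subtly wrong, so here's a small sketch of one reasonable reading: pool the tickets from the 14 days ending on a given date, then take the negative share. The `daily_counts` structure and the numbers in it are invented for illustration.

```python
from datetime import date, timedelta

def rolling_pct_negative(daily_counts, end_day, window_days=14):
    """Percent negative over the window_days ending on end_day (inclusive).

    daily_counts maps a date to (negative_tickets, total_tickets).
    Counts are pooled across the window, so busy days weigh more than quiet ones.
    """
    negative = total = 0
    for offset in range(window_days):
        neg, tot = daily_counts.get(end_day - timedelta(days=offset), (0, 0))
        negative += neg
        total += tot
    return negative / total if total else None

# Made-up data: 28 days at 20 tickets a day, with 8 negative per day in the
# first 14 days (pre) and 6 negative per day in the last 14 (post).
start = date(2024, 6, 1)
daily_counts = {
    start + timedelta(days=i): (8 if i < 14 else 6, 20) for i in range(28)
}

pre = rolling_pct_negative(daily_counts, start + timedelta(days=13))
post = rolling_pct_negative(daily_counts, start + timedelta(days=27))
print(f"pre: {pre:.0%}, post: {post:.0%}")
```

The same function with the same `window_days` runs for both periods. That's the "keep the frame steady" part.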

If you want a refresher on pre/post rigor, there’s a clear overview of establishing baselines and comparison windows in this primer on pre‑ versus post‑analyses. The principle is simple: define before you measure, and keep the frame steady while you test.

When something big ships mid‑test—a policy change, a new refund flow—flag the dates and run a quick “did the curve break?” check. You don’t need a PhD here, just discipline.

The Hidden Costs of Blind Coaching

Blind coaching looks cheap. It isn’t. Sampling burns hours, misreads patterns, and sends agents chasing the wrong fixes. The bill shows up as rework, escalations, and leadership skepticism.

Let’s pretend you coach on vibes for a quarter

Let’s pretend your org handles 3,000 tickets a month. You sample 10% at three minutes each. That’s 300 tickets and 15 hours of reading a month for a partial view. Over three months? 45 hours. And you still might miss the quiet driver that matters most: onboarding confusion in a niche workflow, for example.
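If you want to plug in your own volume, the arithmetic fits in a few lines. The inputs below are the pretend numbers from this example, not benchmarks.

```python
tickets_per_month = 3000
sample_percent = 10
minutes_per_ticket = 3

sampled = tickets_per_month * sample_percent // 100   # tickets read each month
hours_per_month = sampled * minutes_per_ticket / 60   # reading time each month
hours_per_quarter = hours_per_month * 3               # reading time over three months
unread_per_month = tickets_per_month - sampled        # tickets nobody opens

print(sampled, hours_per_month, hours_per_quarter, unread_per_month)
```

The last number is the one to watch: under these assumptions, 2,700 tickets a month go unread.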

Now add the rework: two weeks on a new empathy script that doesn’t touch the real trigger. Add another week for the post‑mortem after escalations spike. The opportunity cost is the fix you didn’t ship and the behavior you didn’t coach because the sample was off. Analytics‑backed programs avoid this waste and focus time on specific behaviors that correlate with outcomes, as seen in this case on data‑driven coaching for targeted improvements.

The math isn’t dramatic—just relentless. Small sampling mistakes compound into months of missed lift.

The credibility tax when leaders ask for evidence

Claims die at “show me.” If your plan relies on stitched spreadsheets, highlighted quotes, and memory, confidence drops. The meeting pivots from “how fast can we roll this out?” to “how sure are we this is real?” That stall costs you approvals, training time, and momentum.

When every metric links to the exact ticket and quote, you skip the tax. A click, a transcript, the moment it turned. Leaders move faster when they can audit the reasoning, not just hear a summary. You can see this dynamic in action in programs that tie analytics to coaching outcomes, like the evidence‑backed approach documented in UPMC’s coaching case.

One more hidden cost: trust erosion. Once people doubt the source, they doubt the next slide too.

When Coaching Fails, Agents Feel It and Customers Do Too

Agents don’t need platitudes; they need plays. Vague guidance creates confusion, rework, and second‑guessing under pressure. Customers feel that wobble in the worst moments.

The 3am escalation you could have prevented

A generic “show more empathy” reminder isn’t a play. In high‑tension moments—refund denials, access lockouts—agents need specific phrases and a cadence to practice them. Without that, escalations spike at 3am when nobody wants to wake the on‑call manager.

Use real quotes to build micro‑modules that match the trigger. “When the customer says X, start with variant A, then ask this check question. If they push, pivot to B.” It sounds basic. It’s not. Live conversations are messy. Precision scripts help agents stay calm and predictable when stakes are high.

Design training like a playbook, not a lecture. One to three behaviors. One sheet. Ten minutes of practice. Agents will actually use it.

How do agents experience unclear guidance?

They feel lost. They rework responses. They second‑guess the “right” tone, then freeze. If guidance changes weekly or examples don’t match what happens in the queue, disengagement creeps in. Coaching stops being helpful and starts feeling like inspection.

Anchor modules in reality—actual transcripts, exact triggers, clear goals. Keep the scope tight so adoption sticks. Honestly, this surprised us more than anything: the smaller the module, the faster the lift. Big programs look impressive; micro‑lessons actually get used.

And give agents a reason to trust the why. When the lesson comes with source quotes, the debate fades and practice starts.

A Practical Playbook You Can Run In 90 Days

A 90‑day coaching loop is enough to set baselines, pilot micro‑modules, and show lift. Keep it simple: select sentinel cohorts, find the top negative drivers from 100% of tickets, build tiny lessons from quotes, and measure pre vs. post.

Pick sentinel cohorts and baseline sentiment

Start with two cohorts: new customers (first 30 days) and top ARR accounts. Lock a 30‑ or 60‑day pre‑period. Capture three numbers for each cohort: percent negative, churn‑risk share, and high‑effort share. Save the views so you can rerun them monthly without rebuilding anything.

Freeze definitions during the pilot—canonical tags, drivers, and KPI formulas. If you tweak the taxonomy midstream, you can’t tell whether coaching moved the needle or the labels did. This is where discipline pays off: a clean baseline makes the rest boring and defensible.

If you need methodological guardrails, revisit this primer on pre/post baselines and comparison windows. You don’t need advanced stats—just consistency.

Query 100 percent of tickets to surface coaching targets

Filter to negative sentiment in each cohort. Group by driver or canonical tag to see where the pain clusters. Click into high‑volume segments and read a handful of tickets. Validate the pattern: Do the examples match the numbers?

You’re looking for three things: the trigger phrase, the sequence misstep, and the expectation gap. Then pick three to five behaviors with the highest negative density. That’s your first batch of coaching targets. Don’t overthink it. You can iterate in week two.

The shift here is simple: from “we think it’s billing” to “these two phrases in refund conversations drive 40% of the negative sentiment.”
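The filter-then-group step can be sketched in a few lines if you're working from an export rather than a tool. The `sentiment` and `driver` fields are assumed names, and the tickets are invented for illustration.

```python
from collections import Counter

def negative_by_driver(tickets):
    """Rank drivers by their share of all negative tickets in a cohort."""
    negatives = [t for t in tickets if t["sentiment"] == "negative"]
    counts = Counter(t["driver"] for t in negatives)
    total = len(negatives)
    # most_common() sorts by count, largest first; an empty list yields [].
    return [(driver, n, n / total) for driver, n in counts.most_common()]

# Made-up cohort tickets, for illustration only.
cohort_tickets = [
    {"sentiment": "negative", "driver": "refund expectations"},
    {"sentiment": "negative", "driver": "refund expectations"},
    {"sentiment": "negative", "driver": "setup confusion"},
    {"sentiment": "positive", "driver": "setup confusion"},
    {"sentiment": "negative", "driver": "refund expectations"},
    {"sentiment": "neutral",  "driver": "billing"},
]

ranked = negative_by_driver(cohort_tickets)
for driver, count, share in ranked:
    print(f"{driver}: {count} negative tickets ({share:.0%} of negative)")
```

The ranking tells you where to read. The trigger phrase still comes from opening the tickets behind the top rows.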

Build micro-training from real quotes and behaviors

Make a one‑page template per behavior: the quote, the goal, script variant A and B, and the coach/owner. Keep modules tiny. One to three behaviors per week. Include the verbatim trigger so agents see context, not just theory.
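If the one-pagers live in a shared doc, a tiny structure keeps them consistent from week to week. This is one possible shape, not a prescribed format. The `driver` and `source_ticket` fields are additions to keep the trace from driver to quote to script intact, and every value in the example is invented.

```python
from dataclasses import dataclass

@dataclass
class MicroModule:
    behavior: str        # the one behavior this module trains
    driver: str          # driver or tag the behavior was found under (assumed field)
    trigger_quote: str   # verbatim customer quote that sets up the moment
    source_ticket: str   # ticket ID so anyone can trace the quote (assumed field)
    goal: str            # what a good outcome looks like
    variant_a: str       # first script to try
    variant_b: str       # fallback if the customer pushes back
    owner: str           # coach accountable for running the practice

    def one_pager(self) -> str:
        """Render the module as the plain-text sheet agents practice from."""
        return "\n".join([
            f"Behavior: {self.behavior}",
            f"Driver: {self.driver}",
            f'Customer said: "{self.trigger_quote}" (ticket {self.source_ticket})',
            f"Goal: {self.goal}",
            f"Variant A: {self.variant_a}",
            f"Variant B: {self.variant_b}",
            f"Coach/owner: {self.owner}",
        ])

# Hypothetical module; the quote, ticket ID, and scripts are placeholders.
module = MicroModule(
    behavior="Set the refund timeline before the customer asks",
    driver="refund expectations",
    trigger_quote="Nobody told me it would take this long.",
    source_ticket="T-0000",
    goal="Customer confirms they know when to expect the refund",
    variant_a="State the timeline first, then confirm the payment method.",
    variant_b="Acknowledge the delay, restate the date, offer a status check.",
    owner="Team lead",
)
print(module.one_pager())
```

Seven lines per module is a useful constraint. If it doesn't fit, it's probably two behaviors.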

Run practice in team huddles or shadow sessions. Ten minutes is enough. Agents learn faster when the material sounds like their queue, not like a generic call‑center handbook. If you’re mapping research themes to behavior goals, keep the chain intact—from driver to quote to script—so you can trace accountability back up.

Share early wins as examples. Nothing accelerates adoption like “Here’s the ticket. Here’s the response we tried. Here’s the shift we saw.”

Measure lift with controlled experiments

Inside each cohort, randomly assign half the agents to the coaching program and keep half as control. Where you can, keep the managers who review the results blind to group assignment to reduce bias. Run two to four weeks of micro‑lessons, then compare pre vs. post for each group using rolling windows.

Note other changes that could confound results, such as policy updates or outage periods, and mark comparison windows accordingly. You’re not aiming for perfect causal inference, just strong, defensible signal. If you see a sustained drop in percent negative and churn‑risk flags in the coached group that the control group doesn’t show, you’re on track.
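One simple way to compare the two groups is a difference-in-differences: take the coached group's pre-to-post change and subtract the control group's change. The text here only asks for a pre vs. post comparison per group, so treat this as one reasonable way to read it, with made-up rates.

```python
def lift(pre_coached, post_coached, pre_control, post_control):
    """Difference-in-differences on a rate such as percent negative.

    Subtracting the control group's change removes whatever moved both
    groups (seasonality, a policy change) from the coached group's change.
    A negative result means the coached group improved more than control.
    """
    coached_change = post_coached - pre_coached
    control_change = post_control - pre_control
    return coached_change - control_change

# Made-up rates: coached agents go from 30% to 22% negative,
# control agents from 31% to 29% over the same windows.
estimate = lift(0.30, 0.22, 0.31, 0.29)
print(f"Estimated lift: {estimate * 100:.1f} points of percent negative")
```

With these numbers the coached group drops eight points and control drops two, so about six points are attributable to coaching rather than to whatever else changed that month.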

Rinse and scale. If a behavior moves the metric, keep it. If it doesn’t, swap it. That’s how you cut frustrating rework.

Still coaching on anecdotes and spreadsheets? There’s a faster path.

How Revelir AI Turns Sentiment Signals Into a Measurable Coaching Loop

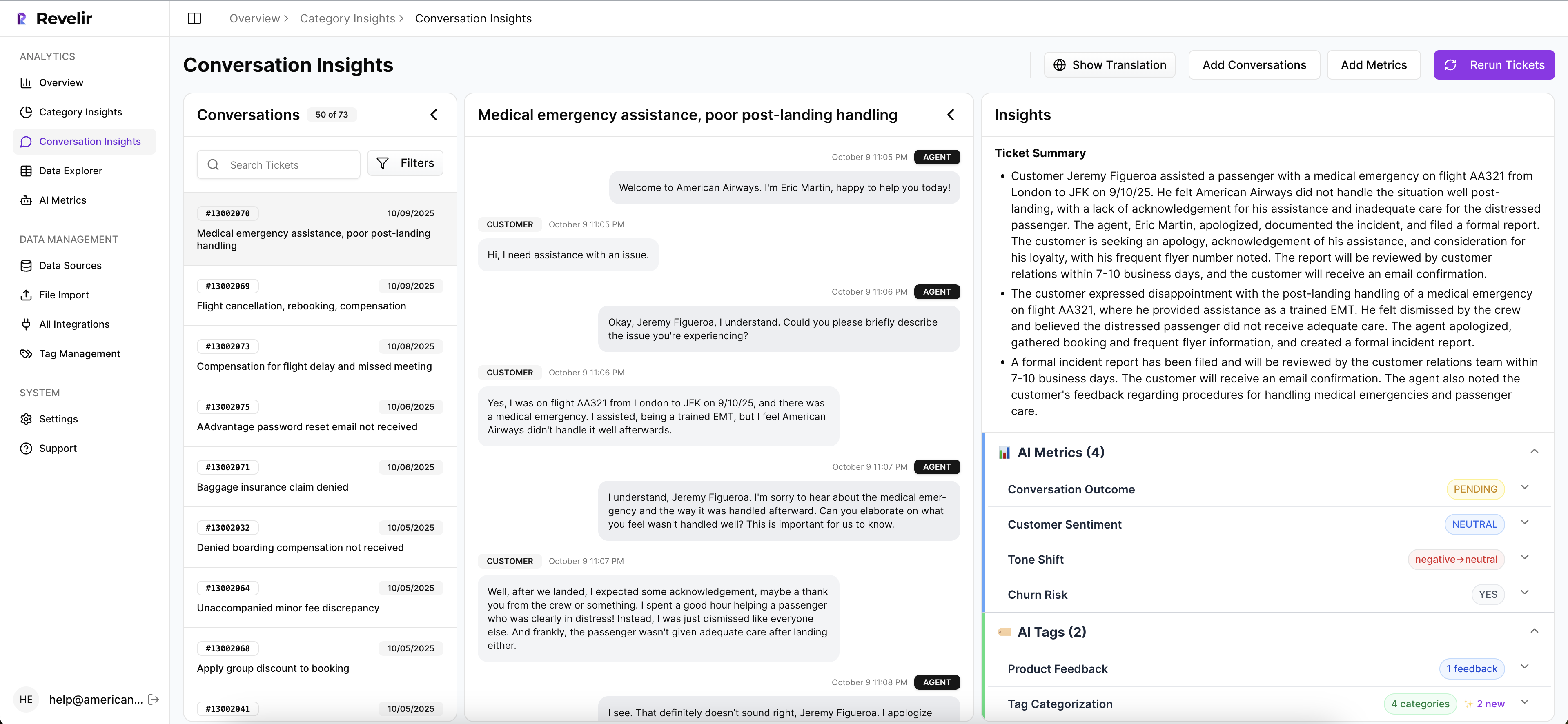

Revelir processes 100% of your conversations and turns them into metrics you can trust: sentiment, churn risk, effort, plus custom AI metrics you define. Every chart links back to the exact ticket and quote. That traceability lets you pick coaching targets, build modules, and prove lift without exporting CSVs.

Full-coverage metrics with traceable evidence

Revelir analyzes all your support tickets—no sampling—so you don’t miss early churn signals or tonal patterns hiding in low‑volume corners. It auto‑computes Sentiment, Churn Risk, and Customer Effort, then preserves a click‑through path to the transcript and the moment the tag fired. Evidence sits one click away.

This matters when stakeholders push on “why.” You can open the ticket and show the words that triggered the metric. It also matters for accountability: when coaching claims map to verifiable examples, approvals move faster. The credibility tax you pay today? It fades.

Tie this back to cost: less time stitching examples, fewer meetings defending the source, more time fixing the behavior that’s actually driving negative sentiment.

Targeting and measurement in Data Explorer

Data Explorer is where you work. Filter by cohort, sentiment, churn risk, or effort. Group by driver or canonical tag to surface patterns. Click into any number to validate examples, then save the view so you can rerun it during your weekly coaching review.

The Analyze Data feature lets you push from “what’s breaking?” to “where exactly is it breaking?” in minutes. Start with “Negative Sentiment by Driver” for your sentinel cohorts, then drill into the top rows and grab quotes for micro‑modules. Next week, rerun the same view and compare.

You move from pattern to quote to module to KPI lift in one session. No data export. No schema rebuild. Just the loop.

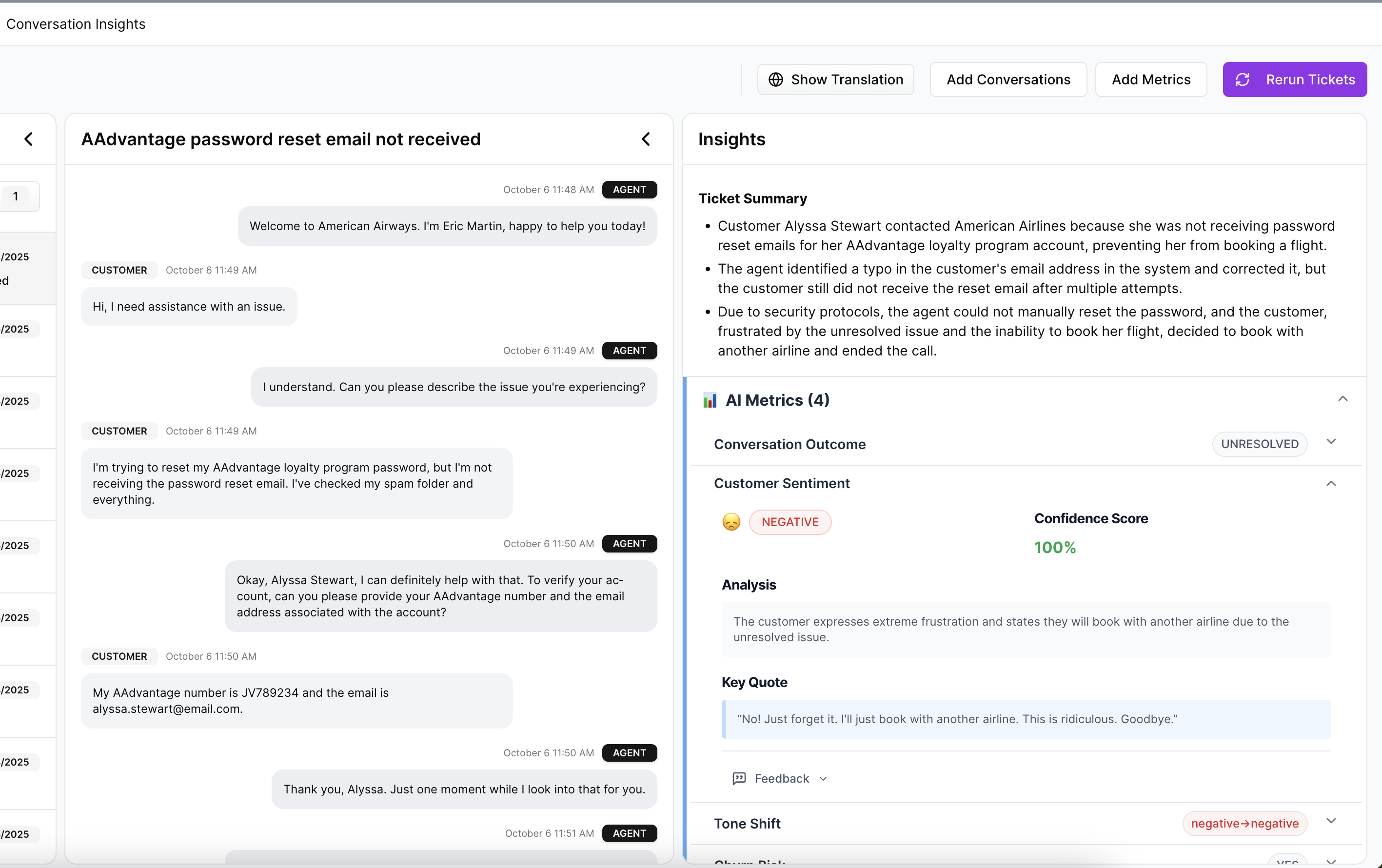

Quote-to-coaching workflow with Conversation Insights

Conversation Insights is the drill‑down. Open a ticket, read the transcript, scan the AI summary, and copy the exact phrase that triggered frustration. Then map it to a behavior goal and script variants in your one‑pager. That’s your micro‑lesson.

Because the evidence lives with the metric, agents see reality, not theory. Adoption improves when modules match how customers actually talk. Managers can also spot edge cases quickly and adjust tags or drivers without breaking the broader taxonomy.

If you need to align with your BI layer, Revelir supports exporting metrics via API so your coaching KPIs show up next to finance or QA dashboards. The point is continuity—from conversation to decision.

Ready to turn 100% of your conversations into evidence‑backed coaching? See how Revelir AI works.

Conclusion

Most coaching misses the mark because it starts with anecdotes and stops at scores. The fix isn’t louder scripts. It’s coverage, drivers, and quotes you can verify. Define sentinel cohorts, lock a baseline, pull targets from 100% of tickets, and build tiny modules agents actually use. Then prove the lift. When your metrics trace to real conversations, coaching stops being a debate and starts being a system.

Frequently Asked Questions

How do I analyze negative sentiment trends over time?

To analyze negative sentiment trends, you can use Revelir AI's Data Explorer. Start by filtering your dataset for 'Sentiment = Negative'. Then, apply a date range filter to focus on the specific time period you're interested in. After that, click 'Analyze Data' and choose 'Sentiment' as your metric. This will generate a table and a chart showing the distribution of negative sentiment over your selected time frame. You can also drill down into specific tickets to see the underlying conversations that contributed to the trends.

What if I need to validate insights from my analysis?

If you want to validate insights, use the Conversation Insights feature in Revelir AI. After running your analysis in Data Explorer, click on any segment count (like the number of negative sentiment tickets) to access the underlying conversations. This allows you to review full transcripts and AI-generated summaries, ensuring that the insights you derived from the metrics align with real customer feedback. This traceability helps you build trust in your findings and supports decision-making.

Can I customize the metrics I track in Revelir AI?

Yes, you can customize the metrics in Revelir AI to align with your business needs. You can define custom AI Metrics that reflect specific aspects of your customer interactions, such as 'Upsell Opportunity' or 'Reason for Churn'. To do this, navigate to the settings in Revelir and create new metrics based on your criteria. This flexibility allows you to tailor the insights you gather from your support conversations, making them more relevant to your team's goals.

When should I use the Analyze Data feature?

You should use the Analyze Data feature in Revelir AI when you need to answer specific questions about your customer interactions. For example, if you want to know what's driving negative sentiment or which issues are affecting high-value customers, this feature allows you to run grouped analyses quickly. Simply select your metrics and dimensions, and Revelir will provide you with a summary of the data, helping you identify trends and prioritize actions based on evidence.

Why does my team need full coverage of support conversations?

Full coverage of support conversations is crucial because it eliminates bias and ensures that you capture all relevant signals from customer interactions. By processing 100% of your tickets, Revelir AI helps you avoid the pitfalls of sampling, which can lead to missed insights and misinformed decisions. This comprehensive approach allows you to detect early churn signals, understand sentiment drivers, and make data-driven decisions that are backed by real customer feedback.