You can treat support metrics like weather reports, or you can treat them like incidents. The first path invites debate and delays. The second creates speed and accountability. This root-cause playbook for conversation spikes applies incident thinking to your CX data so you move from suspicion to verified cause in hours, not weeks.

We built this after too many calls where leaders argued over trends while customers waited. The pattern repeats. A spike hits, people scramble, someone samples a few tickets, and trust erodes. When every claim ties back to quotes, and every step has an owner, the room calms. You fix the root cause faster. And you stop wasting cycles on the wrong work.

Key Takeaways:

- Treat conversation spikes like incidents with states, owners, and decision gates

- Prove every claim with evidence you can trace to exact tickets and quotes

- Stop sampling during spikes; use full coverage so pivots stay defensible

- Quantify detection and acknowledgment delays to expose hidden costs

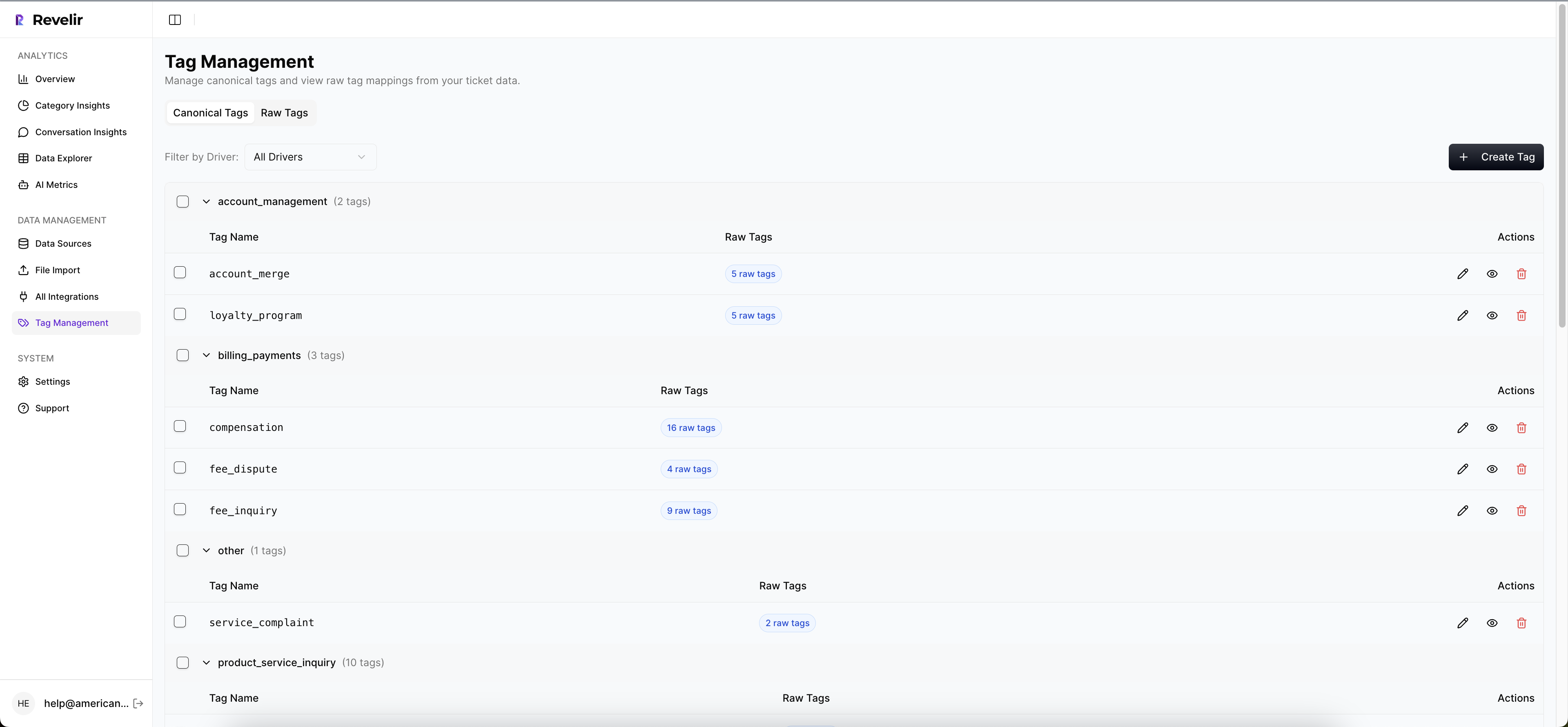

- Stabilize language with raw tags, canonical tags, and drivers

- Use a simple runbook so anyone can triage, validate, and respond at 2 a.m.

CX Metric Incident Response: Root-Cause Playbook for Conversation Spikes Starts With Incident Thinking

Treating metrics as incidents replaces passive dashboards with an active pipeline. You define severity, detection, acknowledgment, containment, and resolution for conversation spikes. You also assign on-call ownership and decision gates. This turns confusion into action and aligns CX, product, and engineering on the same clock.

From Dashboards to Incidents

Passive dashboards tell you what happened. Incident thinking tells you who acts next and when. The difference is structure. You set a severity based on customer impact, create a clear acknowledgment window, then move to containment while root cause is validated. We learned the hard way that vague ownership is a hidden cost. It slows everything.

When a spike appears, you do not argue about screenshots. You treat it like an event. Someone owns detection. Someone else owns validation. A third person owns the comms rhythm. Keep the loop tight and specific. You will cut noise and reduce the risk of the wrong escalation.

Clear States, Owners, and Gates

Incidents run on states and owners. Without those, you drift. We keep it simple, because simple wins under pressure. The team sees the same checklist, moves through the same gates, and knows who says go or no go at each point.

States to define, then adopt:

- Detection: spike suspected, owner paged, time stamped

- Acknowledgment: owner confirms signal, notifies channel, sets next update

- Containment: temporary steps to reduce customer pain while cause is mapped

- Resolution: fix deployed and verified against the same metrics

- Post-incident: short review and annotation of timelines and model changes
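The states above can be sketched as a small state machine that refuses to skip a gate and records who moved the incident and when. This is a minimal illustration, not a prescribed implementation: the `Incident` class, the owner names, and the rule that states advance strictly in order are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from enum import Enum
from typing import Optional


class State(Enum):
    DETECTION = "detection"
    ACKNOWLEDGMENT = "acknowledgment"
    CONTAINMENT = "containment"
    RESOLUTION = "resolution"
    POST_INCIDENT = "post_incident"


# Assumption for this sketch: states are entered strictly in this order.
ORDER = list(State)


@dataclass
class Incident:
    title: str
    # (state, owner, timestamp) entries; the log doubles as the timeline.
    log: list = field(default_factory=list)

    def enter(self, state: State, owner: str, at: Optional[datetime] = None) -> None:
        """Record that `owner` moved the incident into `state`."""
        if len(self.log) >= len(ORDER):
            raise ValueError("incident is already closed")
        expected = ORDER[len(self.log)]
        if state is not expected:
            raise ValueError(f"expected {expected.name}, got {state.name}")
        self.log.append((state, owner, at or datetime.now(timezone.utc)))

    def minutes_between(self, start: State, end: State) -> float:
        """Elapsed minutes between two logged states, e.g. time to acknowledge."""
        stamps = {s: t for s, _, t in self.log}
        return (stamps[end] - stamps[start]).total_seconds() / 60


# Hypothetical usage with made-up owners and timestamps.
incident = Incident("Billing contact spike")
t0 = datetime(2024, 1, 8, 2, 0, tzinfo=timezone.utc)
incident.enter(State.DETECTION, "analyst_on_call", t0)
incident.enter(State.ACKNOWLEDGMENT, "cx_lead", t0 + timedelta(minutes=30))
print(incident.minutes_between(State.DETECTION, State.ACKNOWLEDGMENT))  # 30.0
```

Keeping the owner and timestamp on every transition means the post-incident review can read acknowledgment and containment times straight from the log instead of reconstructing them from chat history.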

CX Metric Incident Response: Root-Cause Playbook for Conversation Spikes Needs Evidence You Can Trace

Evidence stops opinion fights. Require every spike claim to include drivers, affected segments, and three verbatim quotes linked to tickets. The ability to click from aggregate to ticket to quote turns persuasion into proof. It shortens decisions and reduces the risk of the wrong fix.
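One way to enforce that requirement is a small completeness check that runs before a claim reaches the incident channel. The sketch below is illustrative only; the `SpikeClaim` and `Quote` types, the field names, and the sample ticket IDs are hypothetical, and the three-quote minimum is taken from the rule above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quote:
    ticket_id: str  # link back to the source ticket
    text: str       # verbatim customer wording


@dataclass(frozen=True)
class SpikeClaim:
    summary: str
    drivers: tuple
    segments: tuple
    quotes: tuple  # Quote instances


def missing_evidence(claim: SpikeClaim, min_quotes: int = 3) -> list:
    """Return a list of gaps; an empty list means the claim is ready to discuss."""
    gaps = []
    if not claim.drivers:
        gaps.append("no driver named")
    if not claim.segments:
        gaps.append("no affected segment named")
    # Only count quotes that carry both a ticket link and actual text.
    linked = [q for q in claim.quotes if q.ticket_id and q.text.strip()]
    if len(linked) < min_quotes:
        gaps.append(f"{len(linked)} of {min_quotes} ticket-linked quotes")
    return gaps


# Hypothetical claim with only two usable quotes.
claim = SpikeClaim(
    summary="Duplicate charges after plan upgrade",
    drivers=("Billing",),
    segments=("pro plan",),
    quotes=(
        Quote("T-101", "I was charged twice this morning."),
        Quote("T-102", "Two identical charges showed up after I upgraded."),
    ),
)
print(missing_evidence(claim))  # ['2 of 3 ticket-linked quotes']
```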

Proof Over Opinions

Score-only charts invite debate. People ask why, and the room stalls. When each metric links to real transcripts, you can answer in seconds. You show the driver table, then click into two or three conversations that mirror the pattern. Honestly, once you do this a few times, nobody asks for a sample anymore. They can see it.

If you want a formal model for playbooks, there are solid references. The AWS incident playbook guidance is written for security teams, but the structure maps well to CX. You still need your own drivers, your own thresholds, and your own quotes. The principle stands: evidence beats opinions.

Structure Language With Tags and Drivers

Raw tickets are messy. You need a shared vocabulary, or you will miss the root cause. Start with granular raw tags for discovery, then map them to canonical tags the business trusts. Roll those up to drivers leaders understand. You keep nuance without losing clarity. You also make trend comparisons reliable.

The hierarchy that reduces confusion:

- Raw tags: AI-generated, fine-grained signals that surface early patterns

- Canonical tags: human-validated categories that stay stable in reports

- Drivers: executive themes like Billing, Onboarding, or Performance for cross-cutting views
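The hierarchy above amounts to two lookups, raw tag to canonical tag and canonical tag to driver. A minimal sketch follows, assuming plain dictionaries hold the mappings. Every tag name in it is invented for illustration, and the `Unmapped` bucket is an assumption added so new raw tags stay visible instead of disappearing from the rollup.

```python
from collections import Counter

# Hypothetical mappings; real ones would come from your own tagging review.
RAW_TO_CANONICAL = {
    "refund_not_received": "Refunds",
    "charged_twice": "Duplicate Charge",
    "double_charge_after_upgrade": "Duplicate Charge",
    "login_loop": "Login Failure",
}
CANONICAL_TO_DRIVER = {
    "Refunds": "Billing",
    "Duplicate Charge": "Billing",
    "Login Failure": "Account Access",
}
UNMAPPED = "Unmapped"


def driver_for(raw_tag: str) -> str:
    """Roll a raw tag up to its driver, or flag it as unmapped."""
    canonical = RAW_TO_CANONICAL.get(raw_tag)
    return CANONICAL_TO_DRIVER.get(canonical, UNMAPPED)


def driver_counts(raw_tags) -> Counter:
    """Count tickets per driver from a list of raw tags."""
    return Counter(driver_for(tag) for tag in raw_tags)


counts = driver_counts(
    ["charged_twice", "refund_not_received", "login_loop", "invoice_pdf_blank"]
)
print(counts.most_common())
```

A growing `Unmapped` count during a spike is itself a signal: it usually means a new raw tag needs a canonical home before the driver table can be trusted.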

The Cost Of Slow Spike Response In CX, With Numbers You Can Defend

Slow response has a real cost. Quantify detection, acknowledgment, and validation time so the team sees the waste. A small delay during a surge compounds into backlog, rework, and customer churn risk. Document those minutes, and you will change behavior faster than any pep talk.

Put Numbers on Delay

Let us pretend you average 500 tickets per day. A surge to 150 percent of that baseline, sustained for a full day, adds roughly 250 tickets. If mean time to detect is 90 minutes and mean time to acknowledge is 30, you are two hours behind before validation even starts. Each manual review takes five minutes, so reading the extra tickets by hand costs 1,250 minutes, about 21 hours of analyst time, on top of rising frustration.
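The arithmetic can be laid out explicitly so the team can swap in its own numbers. This sketch uses one reading that reproduces the 250-ticket figure: volume at 150 percent of baseline, applied to a full day of tickets. That interpretation, and the variable names, are assumptions for the example.

```python
BASELINE_TICKETS_PER_DAY = 500
SURGE_RATIO = 1.5              # volume at 150 percent of baseline (assumed reading)
MTTD_MINUTES = 90              # mean time to detect
MTTA_MINUTES = 30              # mean time to acknowledge
REVIEW_MINUTES_PER_TICKET = 5  # manual review time per ticket

# Extra tickets created by the surge over one day.
extra_tickets = BASELINE_TICKETS_PER_DAY * (SURGE_RATIO - 1)

# Minutes that pass before anyone owns the spike.
minutes_before_ownership = MTTD_MINUTES + MTTA_MINUTES

# Analyst time needed to read every extra ticket by hand.
review_minutes = extra_tickets * REVIEW_MINUTES_PER_TICKET

print(f"extra tickets: {extra_tickets:.0f}")                    # 250
print(f"minutes before ownership: {minutes_before_ownership}")  # 120
print(f"manual review minutes: {review_minutes:.0f}")           # 1250
print(f"manual review hours: {review_minutes / 60:.1f}")
```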

The fix is not heroic effort. It is earlier detection, faster acknowledgment, and full coverage validation that takes minutes, not hours. You cut rework because you make fewer wrong guesses. You also protect brand equity, which is hard to measure until it is gone.

Control False Positives and Hygiene

False positives waste time and political capital. A noisy alert interrupts engineers, burns analyst hours, and erodes leadership trust. Set cohort-aware thresholds and require transcript checks before escalation. A quiet hour on validation saves a noisy week later. It is not busywork. It is risk reduction.

Measurement hygiene matters too. If you fail to annotate incident windows and classifier changes, trend lines lie. You will double count volume, mislabel a driver, and ship the wrong fix. Keep version notes and incident annotations. The GuidePoint incident documentation guidance has a practical checklist you can adapt.

The Human Side Of A Metric Spike And How To Keep Trust

Spikes create anxiety. The fastest way to lower the temperature is to show evidence and set a cadence. You say what you will validate, when you will report back, and how you will decide. People relax when they see quotes, not just charts. Morale improves and teams act with clarity.

Calm the Room With Evidence

Set the tone early. Try this line: "We have a suspected spike. We will run three queries and read ten tickets before we escalate." Then show two quotes that match the pattern. The room shifts from fear to focus. You protect trust by proving the story, not selling it.

Communication cadence matters as much as the fix. A simple update rhythm, plus clear owners, keeps stakeholders aligned. For a template, the Atlassian incident communication guidance is a good starting point. Adapt it to CX language so it feels natural to your team.

Agents See the Spike First

Frontline agents feel high-effort conversations before your dashboard does. If you ignore their signals, you risk missing early churn cues. Pull a few representative transcripts, and ask a senior agent to sanity-check driver mappings before you escalate. It costs ten minutes. It saves a week of rework.

Create small wins in the first hour. Pause non-urgent tasks, add a holding reply for the affected segment, and share a simple workaround script. Momentum reduces fear. Then return to the evidence, refine the root cause, and adjust the plan with a clear head.

CX Metric Incident Response: Root-Cause Playbook for Conversation Spikes, End To End Workflow

A good runbook makes incidents boring. Detect with cohort-aware rules, triage with pivot queries, validate with quotes, then coordinate a fix. Keep the steps simple, clear, and repeatable. Add owners and gates so anyone can run the play at 2 a.m. without guesswork.

Detect Spikes With Cohort-Aware Rules

Static thresholds fail at odd hours. Use relative baselines, such as volume or negative sentiment above 150 percent of the baseline for the same weekday and hour, for two consecutive windows. Blend rule types to avoid single-metric mistakes. Combine volume by channel, negative sentiment ratio, and churn risk density. Start sensitive, apply noise filters, then tune.
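A cohort-aware rule of that shape can be sketched in a few lines. The example below covers a single metric only and assumes the baseline is the mean of past counts for the same weekday and hour. The function name, the data layout, and the choice of mean are illustrative assumptions, and blending several metrics would sit on top of this.

```python
from statistics import mean


def is_spike(current_windows, baseline_history, ratio=1.5, consecutive=2):
    """Flag a spike when `consecutive` windows in a row exceed the baseline.

    current_windows: list of (weekday, hour, count) tuples in time order.
    baseline_history: dict mapping (weekday, hour) to a list of past counts.
    """
    streak = 0
    for weekday, hour, count in current_windows:
        past = baseline_history.get((weekday, hour))
        if not past:
            streak = 0  # no baseline for this cohort, so do not guess
            continue
        if count > ratio * mean(past):
            streak += 1
            if streak >= consecutive:
                return True
        else:
            streak = 0
    return False


# Hypothetical history: Monday (weekday 0) at 02:00 and 03:00 average 10 tickets.
history = {(0, 2): [10, 12, 8], (0, 3): [10, 10, 10]}

print(is_spike([(0, 2, 16), (0, 3, 16)], history))  # True: two windows above 15
print(is_spike([(0, 2, 16), (0, 3, 14)], history))  # False: second window is normal
```

Requiring two consecutive windows is what keeps a single noisy hour from paging anyone, at the cost of one extra window of detection delay.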

If you want rigor, borrow from security. The NIST Incident Handling Guide explains stages and governance well. You do not need every control. You do need a clear trigger, a single owner, and a simple pre-validated query set.

Triage in Minutes With Pivot Queries

Speed comes from good defaults. You should be able to answer where and who in under ten minutes. Start broad, then tighten the scope. The goal is a defensible picture now, not a perfect one tomorrow. You can refine once the room is calm.

Run this first-pass sequence:

- Filter by date range and channel to isolate the spike window

- Pivot Sentiment by Driver or Canonical Tag to find concentration

- Slice by plan tier or segment to see who is most affected

- Check churn risk density within the affected cohorts

- Click counts to open tickets and scan ten transcripts for quotes
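The sequence above can be sketched against a plain list of ticket records. The code is a rough illustration in standard-library Python, not how any particular tool implements its pivots. The ticket fields, sample data, and helper names are all hypothetical.

```python
from collections import Counter
from datetime import datetime

# Hypothetical ticket records; field names are assumptions for this sketch.
TICKETS = [
    {"id": "T1", "created": datetime(2024, 1, 8, 2, 5), "channel": "chat",
     "driver": "Billing", "sentiment": "negative", "plan": "pro", "churn_risk": True},
    {"id": "T2", "created": datetime(2024, 1, 8, 2, 20), "channel": "chat",
     "driver": "Billing", "sentiment": "negative", "plan": "pro", "churn_risk": False},
    {"id": "T3", "created": datetime(2024, 1, 8, 2, 40), "channel": "chat",
     "driver": "Onboarding", "sentiment": "neutral", "plan": "free", "churn_risk": False},
    {"id": "T4", "created": datetime(2024, 1, 8, 3, 10), "channel": "chat",
     "driver": "Billing", "sentiment": "negative", "plan": "free", "churn_risk": True},
    {"id": "T5", "created": datetime(2024, 1, 8, 9, 0), "channel": "chat",
     "driver": "Performance", "sentiment": "negative", "plan": "pro", "churn_risk": False},
]


def in_window(tickets, start, end, channel):
    """Step 1: isolate the spike window for one channel."""
    return [t for t in tickets
            if start <= t["created"] < end and t["channel"] == channel]


def negative_by(tickets, key):
    """Steps 2 and 3: count negative-sentiment tickets grouped by a field."""
    return Counter(t[key] for t in tickets if t["sentiment"] == "negative")


def churn_density(tickets):
    """Step 4: share of tickets flagged as churn risk."""
    return sum(t["churn_risk"] for t in tickets) / len(tickets) if tickets else 0.0


spike = in_window(TICKETS, datetime(2024, 1, 8, 2), datetime(2024, 1, 8, 4), "chat")
top_driver, _ = negative_by(spike, "driver").most_common(1)[0]
cohort = [t for t in spike if t["driver"] == top_driver]

print(top_driver)                     # Billing
print(negative_by(cohort, "plan"))    # which plan tiers are hit
print(churn_density(cohort))          # churn risk density in the cohort
print([t["id"] for t in cohort][:10]) # step 5: tickets to open and read
```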

Validate, Map Cause, Coordinate Response

Read ten conversations from the dominant driver. Confirm the story with quotes. If mappings are off, group raw tags under the correct canonical tags. Map the driver to a label leaders trust, like Billing, Account Access, or Performance. Then open an incident channel, tag owners in CX, product, engineering, and comms, and agree on near-term mitigations while the deeper fix is scoped.

I like to keep the runbook to one page at first. It covers thresholds, the five queries above, rules for how many transcripts to read during validation, the incident channel, roles, and a short postmortem template. Clarity beats complexity when pressure is high.

How Revelir AI Operationalizes CX Metric Incident Response

Tools should make this workflow feel routine. Revelir AI processes 100 percent of conversations, applies AI metrics, and preserves traceability to the exact quotes behind every insight. You filter, pivot, and click into tickets in minutes. That speed cuts detection and acknowledgment time and reduces the risk of false escalation.

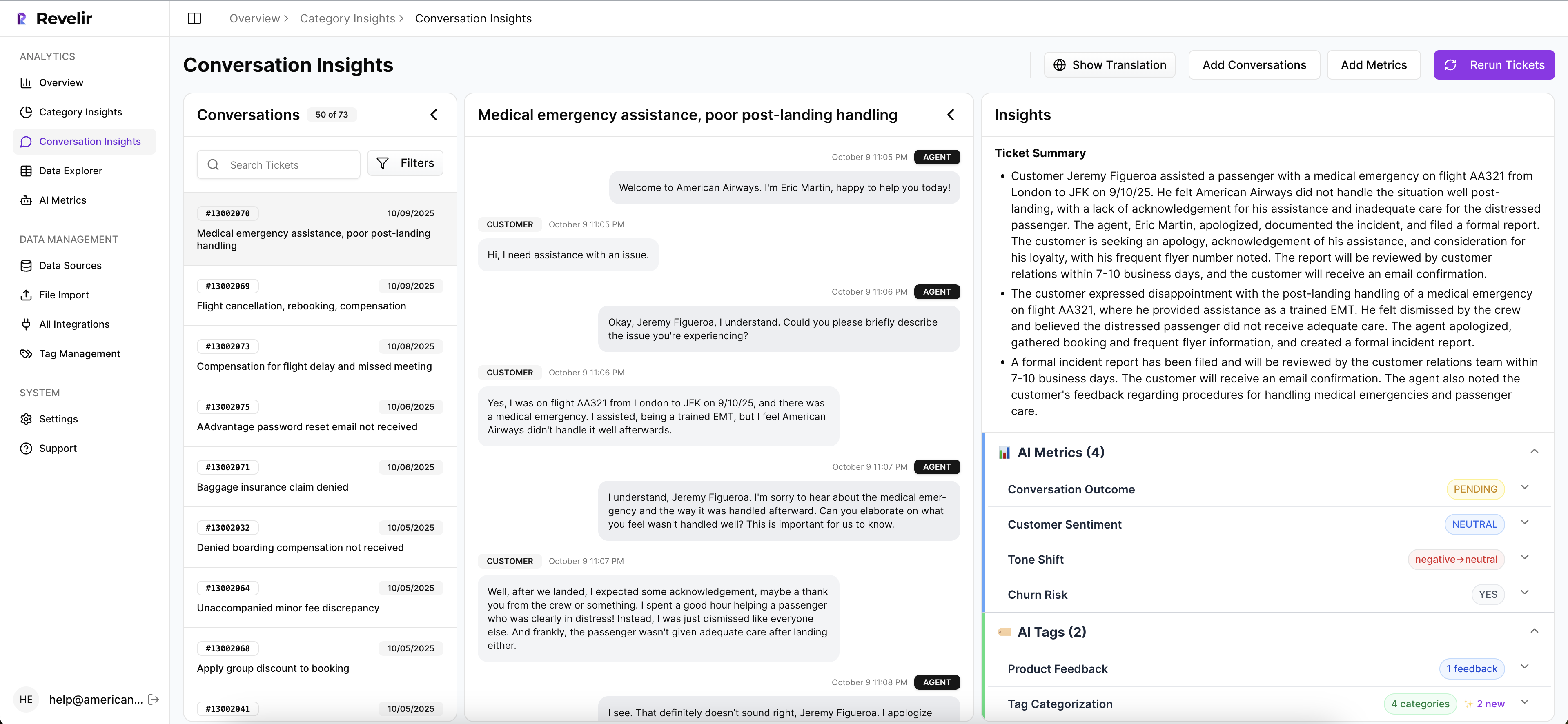

Full Coverage and Traceable Drill Downs

Revelir AI reviews every conversation and assigns metrics like Sentiment, Churn Risk, and Customer Effort, plus raw tags, canonical tags, and drivers. Every aggregate links to the tickets and quotes behind it in Conversation Insights. You stop sampling and start verifying, which moves debate to decisions. In practice, the gap between detection and a validated cause shrinks because validation takes minutes.

When executives ask for proof, you click through. The quotes are right there. In my experience, this single change heals a lot of trust issues that slow product decisions.

Faster Pivots in Data Explorer

With Revelir AI, your first ten minutes look different. You filter by date range, channel, segment, and metric fields in Data Explorer, then run grouped analyses in Analyze Data to see where the spike concentrates. One click opens representative tickets to confirm patterns. What used to take an afternoon in spreadsheets becomes a tight loop: a quick pivot, a transcript scan, a clear call.

The shift is not subtle. It is the difference between containment today and cleanup next week.

Align Fixes With Custom Metrics and Drivers

Revelir supports a hybrid tagging system and custom AI Metrics that match your language. You map raw tags to canonical tags, roll them into drivers, and quantify which drivers push negative sentiment or churn risk. Product gets a fix list tied to evidence. CX gets messaging that addresses the real issue. Start by uploading a CSV or connecting Zendesk so you can see it on your data right away.

Ten-minute pivots and traceable metrics. That is what Revelir delivers.

Before you wrap, consider a small pilot. Upload last month’s tickets, run the five queries, and annotate one spike. You will feel the difference in a single review. If it helps, we can set this up together.

Conclusion

Incident thinking for CX is simple. Define states and owners, prove every claim with quotes, and stabilize language with tags and drivers. The rest is rhythm. Detect, triage, validate, fix, and annotate. Do this well once, and you will never go back to sampling or score-only debates. The payoff is not a prettier dashboard. It is fewer wrong fixes, lower risk, and faster relief for the customers who felt the spike first.

Frequently Asked Questions

How do I analyze conversation spikes using Revelir AI?

To analyze conversation spikes with Revelir AI, start by accessing the Data Explorer. 1) Use filters to isolate tickets from the spike period. 2) Apply the 'Sentiment' filter to focus on negative interactions. 3) Click 'Analyze Data' and select 'Group By' to categorize results by drivers or canonical tags. This will help you identify the main issues contributing to the spike, allowing your team to address them effectively.

What if I need to validate insights from my CX data?

If you need to validate insights, you can use the Conversation Insights feature in Revelir AI. After running your analysis, click on any metric number to see the underlying tickets. Review the full transcripts and AI-generated summaries to ensure the insights align with the actual conversations. This step helps confirm that your findings are accurate and actionable.

Can I customize metrics in Revelir AI for my business needs?

Yes, you can customize metrics in Revelir AI to fit your specific business language. To do this, define your custom AI metrics such as 'Upsell Opportunity' or 'Reason for Churn'. Once set up, Revelir will apply these metrics consistently across your conversations, allowing you to generate insights that are directly relevant to your operations and decision-making.

When should I use full coverage instead of sampling?

You should use full coverage when analyzing customer support conversations, especially during spikes in ticket volume. Sampling can miss critical signals and introduce bias into your data. With Revelir AI, you can process 100% of your tickets, ensuring that every conversation is analyzed. This approach provides a comprehensive view of customer sentiment and issues, allowing for more informed decision-making.

Why does my team need evidence-backed metrics?

Your team needs evidence-backed metrics to make informed decisions based on real customer conversations. Revelir AI transforms unstructured support tickets into structured metrics, linking insights back to specific quotes. This traceability builds trust among stakeholders and helps prioritize product improvements or customer experience initiatives by showing exactly what issues need to be addressed.