You can convert customer feedback into PRD-ready user stories with LLMs, but only if you treat stories like data, not prose. That means a strict template, confidence scores you can defend, and quotes linked to IDs you can show in the room. It sounds rigid. It frees you up. You get fewer arguments and cleaner handoffs.

We have a simple rule when we set this up with teams. If a field is empty or an acceptance criterion lacks evidence, it is not ready. No exceptions. The pipeline extracts the story, you review low-confidence items, and every decision traces back to the exact conversation. You move from digging to deciding. That is the point.

Key Takeaways:

- Replace summaries with a story schema and testable acceptance criteria tied to quote IDs

- Add confidence scoring and require rationale so PMs know what to review first

- Link every criterion to evidence, capped at three quotes, with conversation IDs and timestamps

- Prioritize by impact times confidence using drivers, sentiment, effort, and churn risk

- Instrument the pipeline end to end and watch rejection and drift trends

- Use Revelir AI to get 100 percent coverage, drivers, and traceable quotes that feed your LLM job

Stop Shipping Summaries, Convert Customer Feedback Into Implementable Stories

Summaries hide edge cases and delay engineering. A PRD-ready story is a structured object with persona, problem, outcome, constraints, and acceptance criteria you can test. The LLM should fill labeled fields only, with each criterion linked to a quote ID. That is how you avoid rework and ship safer.

Why summaries break engineering handoffs

Engineers do not build from vibes. They build from clear inputs. Summaries compress nuance and bury the exact steps the customer took, so defects slip through. When you force the model to output discrete fields, you surface missing parts fast. You also make it easy to validate against the source text.

Most teams think a clean paragraph is “good enough.” It is not. A fielded story lets you add validators, run similarity checks against quotes, and spot contradictions. You can even reject early when persona, driver, and evidence do not align. Clarity replaces debate. Rework drops.
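A similarity check against quotes can be as small as the sketch below. It is a minimal illustration using Python's standard `difflib`; the function names, the sentence splitting rule, and the 0.8 threshold are assumptions for this example, not values taken from any tool.

```python
import re
from difflib import SequenceMatcher


def best_match(quote: str, source_text: str) -> float:
    """Highest similarity between the quote and any sentence in the source."""
    sentences = [
        s.strip().lower()
        for s in re.split(r"[.!?]+", source_text)
        if s.strip()
    ]
    target = quote.strip().lower().rstrip(".!?")
    return max(
        (SequenceMatcher(None, target, s).ratio() for s in sentences),
        default=0.0,
    )


def is_supported(quote: str, source_text: str, threshold: float = 0.8) -> bool:
    """True when some sentence in the source is close enough to the quote."""
    return best_match(quote, source_text) >= threshold
```

A quote that matches a sentence scores 1.0, and a quote the model invented scores low. Quotes that cover only part of a sentence will score lower than full sentences, so treat the threshold as something to tune on your own data.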

Define PRD-ready user story fields up front

Start with the object. A PRD-ready story includes persona, problem, desired outcome, constraints, non-goals, driver and canonical tag, and acceptance criteria written as checks. Add a confidence score with justification and links to evidence quotes by ID and timestamp. If any field is empty, the story is not ready.
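One way to make the "empty field means not ready" rule mechanical is to hold the story in a typed object and run a check over it. The sketch below is a minimal Python illustration; the class names, field names, and the `readiness_problems` helper are assumptions for this example, not a schema prescribed by any tool.

```python
from dataclasses import dataclass, field, fields
from typing import Optional


@dataclass
class Evidence:
    quote_id: str      # stable ID of the quote (format is hypothetical)
    timestamp: str     # timestamp string as stored in the source system
    text: str          # raw quote text


@dataclass
class Criterion:
    check: str                                   # one testable statement
    evidence: list[Evidence] = field(default_factory=list)


@dataclass
class Story:
    persona: Optional[str]
    problem: Optional[str]
    desired_outcome: Optional[str]
    constraints: Optional[str]
    non_goals: Optional[str]
    driver: Optional[str]
    canonical_tag: Optional[str]
    confidence: Optional[float]                  # assumed range 0.0 to 1.0
    confidence_rationale: Optional[str]
    acceptance_criteria: list[Criterion] = field(default_factory=list)


def readiness_problems(story: Story) -> list[str]:
    """Return the reasons a story is not ready. An empty list means ready."""
    problems = []
    for f in fields(story):
        value = getattr(story, f.name)
        if value is None or value == "" or value == []:
            problems.append(f"empty field: {f.name}")
    for i, criterion in enumerate(story.acceptance_criteria):
        if not criterion.evidence:
            problems.append(f"criterion {i} has no evidence")
    return problems
```

Returning a list of reasons, rather than a bare true or false, gives the reviewer something concrete to fix and gives you something to count later.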

Locking this definition is how you align PM, design, and engineering. It also becomes your review gate. You can automate checks for nulls, enforce label names, and block merge on missing evidence. The model is allowed to say “unknown,” but only with a reason. That honesty saves you later.

Template the acceptance criteria, not the prose

The LLM should produce acceptance criteria as a short checklist, not a paragraph. Each line should be testable. Tie every line to one or more quotes with IDs. That linkage prevents the common mistake of writing criteria that feel right but map to nothing real. It also speeds QA.

We have seen teams add a tiny “rationale” note per criterion. One sentence. Why does this quote support this check? It sounds small. It kills ambiguity. During review, you reject anything without strong linkage or with a thin rationale. The model learns quickly when you feed corrected examples back in.

Traceability, Not Just Text: Convert Customer Feedback Into Auditable Requirements

Auditability means every claim in the story traces to a conversation you can open. Build fields for quote ID, timestamp, and driver, store raw quote text, and keep a permalink back to the ticket. When someone asks why, you click once. Trust rises and meetings end faster.

Design evidence keys that survive scrutiny

You want to survive the hard question. “Show me where this came from.” That is why you store a stable conversation ID, a timestamp, and the driver or canonical tag next to each criterion. You also keep the raw quote text in the record so you do not rely on memory or screenshots.

It is usually not the big items that get challenged. It is the small ones. A subtle onboarding step. A quiet edge case. When your evidence keys open the exact conversation in seconds, the room stops arguing about whether the problem is real and starts discussing the fix.

Attach quotes at the criterion level without noise

Linking quotes at the story level is too coarse. Bundle them at the criterion level so each check has its own proof. Cap it at three quotes to avoid noise. Prefer quotes that show steps, edge cases, or exact wording customers use. That is where bugs hide.

Add a short rationale field. One sentence on why the quote supports the criterion. During review, reject criteria without strong linkage or with generic frustration quotes. This is the quality bar that prevents drift. It also trains the model when you loop corrected items back as few-shot examples.
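The review rules above (at least one quote, at most three, IDs and timestamps present, a rationale on every criterion) can be sketched as a small checker. This is an illustrative example only; the dictionary keys and the `review_criterion` name are hypothetical, and the verbatim substring test is one simple way to confirm a quote came from the conversation.

```python
MAX_QUOTES = 3  # cap described in the text above


def review_criterion(criterion: dict, source_text: str) -> list[str]:
    """Return rejection reasons for one acceptance criterion."""
    reasons = []
    quotes = criterion.get("quotes", [])
    if not quotes:
        reasons.append("no quotes attached")
    if len(quotes) > MAX_QUOTES:
        reasons.append(f"more than {MAX_QUOTES} quotes")
    for quote in quotes:
        if not quote.get("quote_id") or not quote.get("timestamp"):
            reasons.append("quote missing ID or timestamp")
        # The quote text must appear verbatim in the conversation.
        text = quote.get("text", "")
        if not text or text not in source_text:
            reasons.append(f"quote {quote.get('quote_id')} not found in source")
    if not criterion.get("rationale", "").strip():
        reasons.append("missing rationale")
    return reasons
```

Judging whether a quote is "generic frustration" still needs a human or a second model pass. This check only removes the cases nobody should have to read.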

The Real Cost Of Manual Triage And Why PMs Fail To Convert Customer Feedback Into Backlog Items

Sampling wastes time and still misses patterns. If you review 10 percent of 1,000 tickets at three minutes each, that is five hours for a partial view. Review 100 percent by hand and you burn 50 hours. Meanwhile, vague specs cause rework. The cost is real and compounding.
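The arithmetic behind those figures, written out so you can plug in your own volumes. The function name and parameters are just labels for this sketch.

```python
def review_hours(tickets: int, sample_rate: float, minutes_per_ticket: float) -> float:
    """Hours needed to read a share of the ticket queue by hand."""
    return tickets * sample_rate * minutes_per_ticket / 60


sampled = review_hours(1000, 0.10, 3)  # 100 tickets x 3 minutes = 5.0 hours
full = review_hours(1000, 1.00, 3)     # 1,000 tickets x 3 minutes = 50.0 hours
```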

The time math that stalls your roadmap

Let’s do the math. Five hours weekly on sampling. Another three hours on cross-team coordination. Then a day lost rewriting specs because acceptance criteria were fuzzy. You have not shipped anything yet. Multiply this by a quarter and you lose sprints to overhead.

Now compare that to an automated extraction pass plus targeted review. The model fills your JSON template from the conversation, flags low confidence, and routes those to PMs. You review where risk is highest and stop reading the same complaints twice. Fewer meetings. Fewer late pivots.

Bias and error patterns you only catch late

Sampling overweights loud anecdotes and ignores quiet patterns. Manual tag variance introduces drift. Without drivers and evidence, stories detach from real problems, so you ship the wrong fix. Track defect escapes tied to unclear criteria, reopened tickets, and escalations. Those are costs finance will not ignore.

The fix is not more summaries. It is better structure. Use drivers to separate two similar issues you might have merged. Tie criteria to quotes so a single enterprise complaint does not hijack your roadmap without the volume to back it. This is how you reduce risk before it becomes churn.

According to an ACM study on user story quality, structured evaluation against clear heuristics leads to higher human ratings, especially when criteria are specific and testable. That mirrors what teams see in practice when they stop shipping summaries.

Late Nights And Untrusted Specs: What The Old Way Feels Like

Specs written from summaries invite pushback and late-night rewrites. You present a recap, an exec asks for proof, and the room stalls. Without traceability to quotes and tickets, priorities slip. Engineering loses faith in the ask. Evidence fixes that. Structure makes it fast.

When the room asks for proof

You know this moment. You share a trend. Someone says, “Show me an example.” If you cannot click into the exact conversation behind the metric, the room shifts to doubt. You start defending the number instead of solving the problem. That is a costly detour.

Make evidence visible by default. Every metric and story field should link to the underlying quotes. With traceability, you stop arguing about whether the pattern is real. You start talking about rollout plans and edge cases. The energy changes. Decisions get made.

You versus the queue at 11 pm

You read tickets at 11 pm trying to spot patterns by hand. It is exhausting and unreliable. The better pattern is simple. Let the system extract first. You review exceptions. Quotes carry the narrative. Your time moves from digging to deciding. Throughput climbs. Morale recovers.

We have seen teams breathe again once they stop copying text into docs. You still use judgment. You just apply it where it counts. And when you do have to defend a call, the quote is right there. No hunting. No guessing.

A Reproducible Pipeline To Convert Customer Feedback Into PRD-Ready User Stories

A reliable pipeline uses strict templates, controlled prompts, few-shot examples, confidence scoring, and human review on low-confidence items. It ranks by impact times confidence and monitors drift. The goal is reproducible outputs and auditable decisions, not clever prose from a single pass.

Prompt and template design that stays reproducible

Lock the schema before you prompt. Instruct the model to fill exact field labels only. Include two or three few-shot examples that show clear personas, tricky edge cases, and the right acceptance format. Add token caps per field to prevent rambling. Determinism beats style here.

Then add guardrails. Require the model to quote only from provided text and to mark uncertain fields as null with a reason. You can validate outputs by checking label presence, type, and length. Research on controllable structure shows this improves reliability in generation tasks, as noted in the ACL 2025 findings on controllable text generation.

To implement the prompt pattern:

- Define the JSON template and allowed values up front

- Provide brief, diverse few-shots that match your schema

- Instruct quote-only extraction with nulls on uncertainty

- Enforce max tokens per field and exact label spelling
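The output checks in that list can be sketched as a validator that runs on every model response before a human sees it. Everything named here is an assumption for illustration: the `SCHEMA` labels, the character caps (used as a rough stand-in for token caps), and the `<label>_reason` convention for explaining a null.

```python
import json

# Hypothetical schema: label -> (expected type, max characters).
SCHEMA = {
    "persona": (str, 80),
    "problem": (str, 300),
    "desired_outcome": (str, 300),
    "driver": (str, 60),
}


def validate_output(raw: str) -> list[str]:
    """Check label presence, type, and length of one model response."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return ["output is not valid JSON"]
    if not isinstance(data, dict):
        return ["output is not a JSON object"]

    errors = []
    # Exact label spelling: anything outside the schema is rejected.
    for label in data:
        if label not in SCHEMA and not label.endswith("_reason"):
            errors.append(f"unexpected label: {label}")

    for label, (expected_type, max_chars) in SCHEMA.items():
        if label not in data:
            errors.append(f"missing label: {label}")
            continue
        value = data[label]
        if value is None:
            # Null is allowed only when the model explains why.
            if not data.get(f"{label}_reason"):
                errors.append(f"null without reason: {label}")
        elif not isinstance(value, expected_type):
            errors.append(f"wrong type: {label}")
        elif len(value) > max_chars:
            errors.append(f"too long: {label}")
    return errors
```

Because the validator is deterministic, you can run it on every response and send only the failures back for a retry or a human look.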

Human feedback that teaches the model

Sample outputs by confidence bands. Review low and medium items first. Correct fields and log why they failed. Feed those fixes back as new few-shot examples. Track which fields miss most and tighten instructions or add validators. Your review rate should fall week over week.

You do not need to review everything forever. Focus on hotspots. If persona alignment keeps failing, add a pre-check or stronger few-shot. If acceptance criteria sprawl, lower token caps and show a tight example. Responsible pipelines favor auditability and feedback loops, as the arXiv work on operational LLM pipelines argues.
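Here is a minimal sketch of the review loop: band stories by confidence, queue the low and medium ones, and count which fields reviewers keep correcting. The band edges and the record keys are hypothetical and should be tuned against your own review outcomes.

```python
from collections import Counter

# Hypothetical band edges.
LOW, HIGH = 0.5, 0.8


def band(confidence: float) -> str:
    """Map a confidence score to a review band."""
    if confidence < LOW:
        return "low"
    if confidence < HIGH:
        return "medium"
    return "high"


def review_queue(stories: list[dict]) -> list[dict]:
    """Low and medium items, lowest confidence first."""
    pending = [s for s in stories if band(s["confidence"]) != "high"]
    return sorted(pending, key=lambda s: s["confidence"])


def failing_fields(review_log: list[dict]) -> list[tuple[str, int]]:
    """Count which fields reviewers corrected most often."""
    return Counter(entry["field"] for entry in review_log).most_common()
```

The top entry from `failing_fields` is your hotspot. That is where a tighter instruction or a new few-shot example is most likely to pay off.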

Prioritize and batch by impact times confidence

Use impact proxies you already trust. Volume by driver, density of negative sentiment, churn risk flags, and segment value. Multiply by confidence to rank items. Batch low-variance stories for quick review and escalate high-impact, low-confidence items straight to PMs.

This ordering reduces risk and waste. You avoid spending a day on a low-volume complaint with shaky evidence. You also unlock fast wins where confidence is high and scope is clear. Simple math. Better focus.
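The ranking can be sketched in a few lines. The weights, the input keys, and the escalation thresholds below are assumptions for illustration; the only part taken from the text is that priority equals impact multiplied by confidence.

```python
def impact(story: dict) -> float:
    """Blend the impact proxies into one number. Weights are hypothetical."""
    return (
        0.4 * story["volume_share"]        # share of tickets for this driver, 0 to 1
        + 0.3 * story["negative_share"]    # share of those with negative sentiment
        + 0.2 * story["churn_risk_share"]  # share flagged as churn risk
        + 0.1 * story["segment_value"]     # normalized segment value, 0 to 1
    )


def priority(story: dict) -> float:
    """Impact times confidence."""
    return impact(story) * story["confidence"]


def rank(stories: list[dict]) -> list[dict]:
    """Highest priority first."""
    return sorted(stories, key=priority, reverse=True)


def needs_pm_escalation(
    story: dict, impact_floor: float = 0.5, confidence_ceiling: float = 0.5
) -> bool:
    """High impact but low confidence: send straight to a PM."""
    return impact(story) >= impact_floor and story["confidence"] < confidence_ceiling
```

Note that a high-impact, low-confidence story can rank below a modest, well-evidenced one. That is why the escalation check runs separately from the ranking.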

Monitoring the pipeline over time

Instrument the whole flow. Measure conversion time from ticket to PRD-ready item, triage reduction, story rejection rate, and post-release defect escapes. Trend by driver and persona. Review example pairs monthly to catch drift early.

When confidence dips or rejections spike, revisit prompts, templates, and sampling rules. Publish a small dashboard leadership trusts. Teams that do this rarely get surprised in prioritization meetings, a pattern echoed in evaluation research like the ACL findings on reliability.
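A rejection-rate trend by driver is one of the simpler signals to instrument. The sketch below assumes each review record carries a sortable `week` label, a `driver`, and a `rejected` flag. Those keys and the 0.15 spike threshold are hypothetical.

```python
from collections import defaultdict


def rejection_rates(reviews: list[dict]) -> dict[tuple[str, str], float]:
    """Rejection rate per (week, driver) from review records."""
    totals = defaultdict(int)
    rejected = defaultdict(int)
    for r in reviews:
        key = (r["week"], r["driver"])
        totals[key] += 1
        if r["rejected"]:
            rejected[key] += 1
    return {key: rejected[key] / totals[key] for key in totals}


def spikes(rates: dict[tuple[str, str], float], threshold: float = 0.15) -> list[str]:
    """Drivers whose rate rose by more than `threshold` week over week."""
    by_driver = defaultdict(list)
    # Sorting by (week, driver) keeps each driver's series in week order.
    for (week, driver), rate in sorted(rates.items()):
        by_driver[driver].append(rate)
    return sorted(
        driver
        for driver, series in by_driver.items()
        if any(later - earlier > threshold for earlier, later in zip(series, series[1:]))
    )
```

A driver that shows up in `spikes` is a prompt to pull a few example pairs for that driver and compare them against last month's, before the drift reaches a prioritization meeting.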

Ready to turn feedback into PRD-ready stories? Revelir AI makes it simple

How Revelir AI Powers Evidence-Backed Story Creation Without Extra Overhead

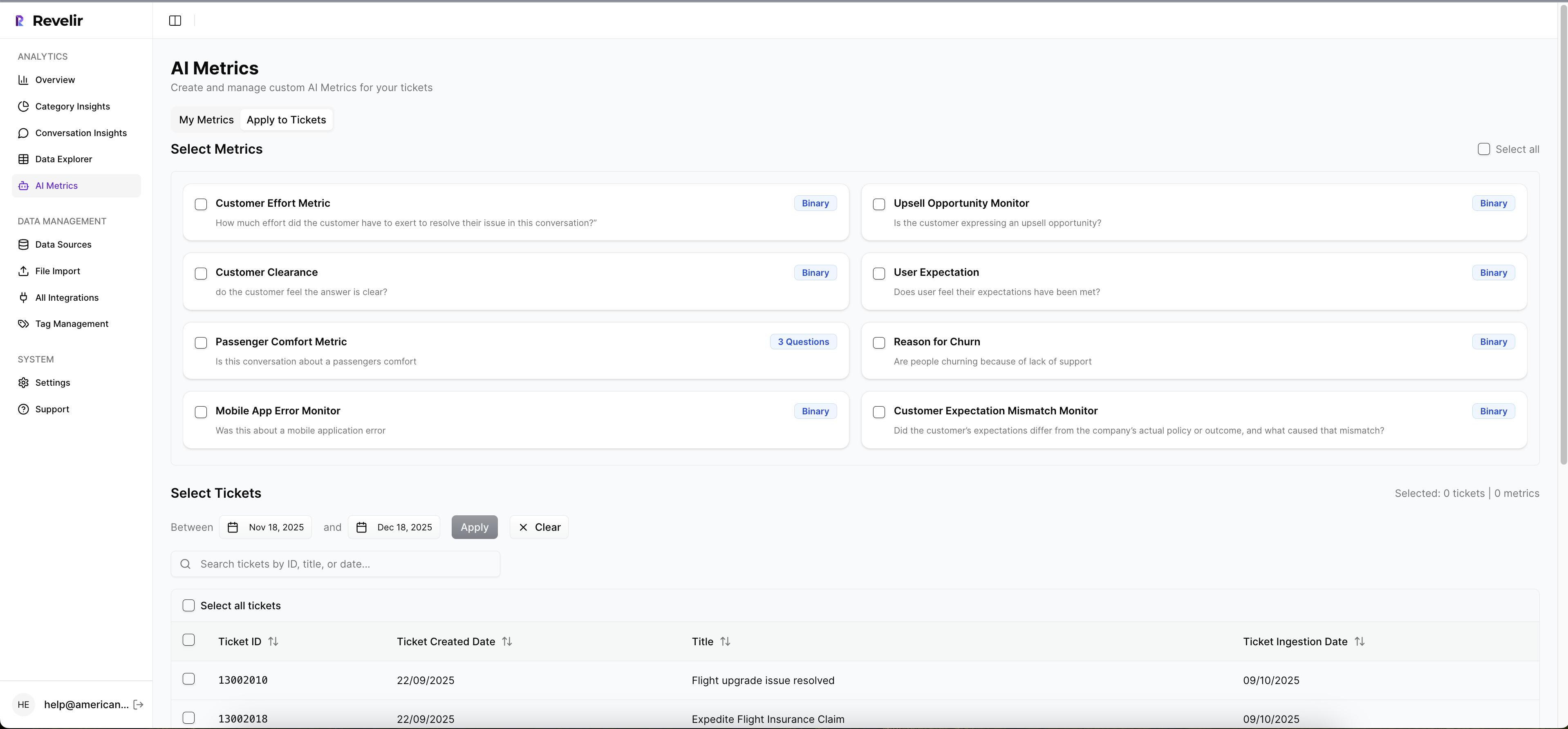

Revelir AI turns 100 percent of your support conversations into structured metrics, drivers, and quotes you can trace. You pivot to the highest-impact issues in minutes, click into Conversation Insights to pull exact quotes, and feed those into your LLM template. No agent workflow changes. Less risk, fewer misses.

Evidence at your fingertips with Data Explorer

Revelir AI processes all tickets automatically and assigns AI Metrics like Sentiment, Churn Risk, and Customer Effort. In Data Explorer, you slice by driver, canonical tag, or segment to find patterns fast. Then you open Conversation Insights to grab the exact quotes and timestamps behind the metric.

This is the backbone for your story pipeline. The evidence sits next to the metric. You do not chase screenshots or ask someone to run another export. You copy the quote, the conversation ID, and the timestamp straight into your story fields. Auditability becomes the default, not a favor.

From evidence to backlog with export paths your tools accept

You can export structured data by API or CSV from Revelir AI. Use that to feed your story extraction job and then push PRD-ready items into your backlog tool using the automations you already have. You keep your stack. You reduce manual triage. You preserve links back to the ticket, which helps prevent rework.

The time you save shows up immediately. Remember the review math: five hours for a 10 percent sample, 50 hours for full manual coverage. Full-coverage processing plus targeted review replaces it. And because each story carries quote IDs, reopened tickets drop. The cost of vague acceptance criteria goes down.

Close the loop with drivers and metrics

Drivers, sentiment, churn risk, and effort become your prioritization inputs before you build and your validation signals after you ship. Revelir AI keeps the evidence chain intact, so PMs can trace a story back to the exact quotes that justified it and prove whether the fix reduced the driver’s negative signal.

In practice, this looks like:

- Full-coverage processing that eliminates sampling bias

- Analyze Data for grouped insights you can pivot in seconds

- Conversation Insights for verifiable quotes and context

- API and CSV export for smooth handoffs into your LLM and backlog

That is how Revelir AI ties back to the hidden costs we outlined earlier. Less time burned on manual triage, fewer misses from sampling, and fewer late-night rewrites when specs are challenged.

Stop chasing approvals and start publishing decisions backed by evidence.

Conclusion

If you want fewer arguments and faster handoffs, stop shipping summaries. Define the story as data, insist on evidence at the criterion level, and let confidence guide review. Use drivers and metrics to rank impact, then monitor drift. The mechanics are simple. The discipline is the win. Revelir AI supplies the coverage, structure, and traceability so your LLM pipeline runs on proof, not hope.

Frequently Asked Questions

How do I convert customer feedback into user stories?

To convert customer feedback into user stories, start by using a strict template that includes a persona, problem, outcome, constraints, and acceptance criteria. Make sure each acceptance criterion is linked to a quote ID from the customer feedback. This ensures you have evidence to support your decisions. You can use Revelir AI to automate this process, as it helps structure the feedback into actionable user stories while maintaining traceability to the original conversations.

What if my user stories lack evidence?

If your user stories lack evidence, you should revisit the original customer feedback and ensure that each story is tied to specific quotes or conversation IDs. Revelir AI can assist by extracting relevant quotes and linking them to your user stories, ensuring that every claim is backed by actual customer input. This not only strengthens your user stories but also provides a solid foundation for discussions with stakeholders.

Can I prioritize user stories based on customer sentiment?

Yes, you can prioritize user stories based on customer sentiment by analyzing the feedback associated with each story. Use Revelir AI to assess sentiment levels linked to your user stories. By identifying which stories are tied to negative sentiment or high churn risk, you can focus on addressing the most pressing issues first. This approach helps ensure that your product development efforts align with customer needs and pain points.

When should I review low-confidence user stories?

You should review low-confidence user stories as soon as they are identified in the pipeline. These stories may lack sufficient evidence or clarity, which can lead to misunderstandings during development. By using Revelir AI, you can quickly trace back to the original conversations that generated these stories and gather more context. This ensures that your team is working with the best possible information before making decisions.

Why does my team need structured user stories?

Structured user stories are crucial because they provide clear, actionable insights that can be easily communicated across teams. They help avoid ambiguity and ensure that engineers have precise inputs to work from. By utilizing Revelir AI, you can transform raw customer feedback into structured, PRD-ready user stories that include all necessary details, which ultimately leads to fewer arguments and cleaner handoffs during development.