Most teams treat sentiment like a traffic light and hope it maps to churn. Green feels safe, red feels urgent, and everything in between gets debated. That shortcut is costly. If you want to prevent real attrition, you have to calibrate sentiment for churn and build a reliability layer around it. No more guessing. No more yelling at charts.

Here’s the simple framing I use with CX and product leaders. Labels are a start, not a decision. The decision lives in calibrated probabilities, clean labels, and thresholds that match the cost of getting it wrong. Then you need evidence on tap so nobody can question the call. Calibrate sentiment for churn, build a reliability layer, and you’ll stop chasing noise.


Key Takeaways:

- Sentiment alone is a weak proxy for churn risk; calibrate scores to observed outcomes by segment and channel.

- Build a churn-labeled evaluation set with strict label hygiene so calibration curves reflect reality.

- Optimize thresholds against cost, not accuracy. Precision and recall should reflect outreach capacity and renewal risk.

- Measure calibration quality with reliability diagrams, ECE, MCE, Brier score, and lift, then check stability by segment.

- Tie every metric to real conversations. Evidence ends debates and accelerates decisions.

- Operationalize the workflow with filters, grouped analysis, drill downs, and exports. Keep a fast loop between metric and quote.

Sentiment Alone Does Not Prioritize Retention Work

Sentiment is descriptive, not prescriptive. Treating positive, neutral, and negative as action signals invites mistakes when churn risk hides inside context, segment, and timing. The fix is simple to say and harder to do: calibrate model outputs to observed churn, then set thresholds that match business costs. One example: a “negative” billing thread that never churns.

Why Categorical Labels Mislead Prioritization

Categorical labels collapse nuance. “Negative” caused by a minor delay is not the same as “negative” from repeated payment failures near renewal. Without calibration, you’ll overreact to loud but low-risk cases and miss high-risk but calm ones. It’s usually the quiet, unresolved threads that predict churn. Same thing with enterprise accounts that never vent but still leave.

What you actually need is a probability that reflects churn likelihood for this segment, in this channel, at this time in the lifecycle. Then you set a threshold that matches your cost curve. High-touch accounts may warrant high precision, while self-serve segments need higher recall. Without that split, you’ll waste time, lose trust, and risk missing the real problem.

If you want a quick market view of churn modeling programs, the overview from Braze on churn prediction is a decent primer. Use it to align teams on vocabulary, then move fast to your own calibration work.

What Is A Reliability Layer And Why It Matters?

A reliability layer turns raw scores into well calibrated probabilities, then enforces segment-aware thresholds you can defend. It also preserves traceability, so when someone asks “why did we flag this,” you can point to a probability, the driver behind it, and the exact quotes.

Without this layer, teams confuse labels with decisions. They respond to “negative” because it’s easy, not because it’s predictive. A reliability layer forces discipline. You calibrate using outcomes, you threshold by cost, and you keep proof on screen. When the conversation shifts to “is this reliable,” you show your curves and your evidence. That stops the debate.

The Real Gap Is Calibration And Label Hygiene

Most teams don’t fail at modeling. They fail at ground truth. If your labels mix sentiment with churn intent, calibration curves will lie. The fastest fix is a small, clean evaluation set labeled for churn outcomes, not mood. Then you guard against drift with a hygiene checklist and strict review. That’s what makes the reliability layer reliable.

Build A Churn-Labeled Evaluation Set

Start with a holdout set built for one purpose: mapping conversations to churn outcomes. Define what “churn risk” means in your world before you start. Include pre-renewal windows, formal cancellation mentions, frustration cues that preceded churn, and intent signals tied to downgrades.

Double-review edge cases, record adjudication notes, and link each label to the conversation. If you can’t click from a metric to an example, you’ll lose the room later. The goal is simple. Labels that reflect the outcome you care about, and evidence you can show. When someone asks for proof, you’re ready.
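One way to keep the outcome, the review trail, and the conversation link together is a small record type. This is a minimal sketch; the `ChurnLabel` class, its field names, and the `conv-123` ID are all hypothetical and should be adapted to your own schema.

```python
from dataclasses import dataclass, field


@dataclass
class ChurnLabel:
    conversation_id: str      # link back to the transcript (hypothetical ID format)
    churned: bool             # observed outcome, not mood
    segment: str
    channel: str
    edge_case: bool = False   # set when the first reviewer is unsure
    reviewers: list[str] = field(default_factory=list)
    adjudication_note: str = ""

    def needs_second_review(self) -> bool:
        """Edge cases are not final until at least two people have reviewed them."""
        return self.edge_case and len(self.reviewers) < 2


label = ChurnLabel("conv-123", True, "enterprise", "email",
                   edge_case=True, reviewers=["ana"])
print(label.needs_second_review())  # True until a second reviewer is added
```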

What Does Label Hygiene Look Like?

Create a checklist and stick to it. Remove duplicates and near duplicates, so you don’t inflate confidence. Balance by segment and channel, because calibration often drifts in chat versus email. Capture time windows that matter, like 60 days pre-renewal. Include the metadata you’ll use for thresholds later, such as tier, product, and region.

Document ambiguous cases. You’ll revisit them when you check for drift. Hygiene is boring, but it prevents the mistake that hurts most: trusting curves trained on noisy labels. If you need a quick refresher on sentiment pitfalls and setup, this guide from AlternaCX on sentiment analysis is a helpful baseline.
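The duplicate-removal item on that checklist can be sketched with the standard library. This is an illustration under assumptions: the `text` and `id` keys and the 0.9 similarity cutoff are hypothetical, and the pairwise scan is only practical for a small evaluation set.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't count."""
    return " ".join(text.lower().split())


def drop_near_duplicates(conversations: list[dict], threshold: float = 0.9) -> list[dict]:
    """Keep the first conversation in each group of near-identical texts.

    `threshold` is an assumed similarity cutoff; tune it on your own data.
    The scan compares every candidate to every kept item, so it is O(n^2).
    """
    kept: list[dict] = []
    for conv in conversations:
        text = normalize(conv["text"])
        is_dup = any(
            SequenceMatcher(None, text, normalize(k["text"])).ratio() >= threshold
            for k in kept
        )
        if not is_dup:
            kept.append(conv)
    return kept


sample = [
    {"id": "t1", "text": "My payment failed again before renewal."},
    {"id": "t2", "text": "my payment failed  again before renewal."},
    {"id": "t3", "text": "How do I add a teammate to my workspace?"},
]
deduped = drop_near_duplicates(sample)
print([c["id"] for c in deduped])  # ['t1', 't3']
```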

Uncalibrated Scores Create Costly False Alarms

Uncalibrated scores waste time and miss risk. If the model says “high” and reality says “rare,” you’ll burn trust fast. You can stop that by quantifying cost, then tuning thresholds to minimize it. Don’t argue accuracy in the abstract. Put a price on false positives and false negatives, then optimize against the total cost. The math is sobering, and clarifying.

Quantify The Cost Of Bad Thresholds

Let’s pretend your current cutoff flags 500 conversations each month. After review, only 15 percent reflect real churn risk. That’s 425 false alarms. If each false alarm costs 10 minutes in triage and 5 minutes of unneeded outreach, you’re losing more than 100 hours per month. And you annoy healthy customers who didn’t need a check-in.
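The arithmetic behind that estimate, using only the illustrative numbers from the paragraph above:

```python
flagged_per_month = 500
real_risk_rate = 0.15
minutes_per_false_alarm = 10 + 5  # triage plus unneeded outreach

false_alarms = round(flagged_per_month * (1 - real_risk_rate))
hours_lost = false_alarms * minutes_per_false_alarm / 60

print(false_alarms, hours_lost)  # 425 106.25
```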

Flip it around. Raise the threshold too far and you’ll miss the risky 10 percent that quietly churn. That’s the revenue hit nobody wants to explain. So build a simple cost model per false positive and per false negative. Then pick thresholds that minimize expected cost for each segment. It turns a loud argument into a clear decision.
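A cost model like this can be very small. The sketch below sweeps candidate thresholds over a toy holdout set and keeps the cheapest one per segment. The probabilities, outcomes, and cost figures are all made up for illustration, and the function names are hypothetical.

```python
def expected_cost(probs, outcomes, threshold, cost_fp, cost_fn):
    """Total cost of acting on every conversation scored at or above `threshold`."""
    cost = 0.0
    for p, churned in zip(probs, outcomes):
        flagged = p >= threshold
        if flagged and not churned:
            cost += cost_fp  # wasted triage and outreach
        elif not flagged and churned:
            cost += cost_fn  # missed churn
    return cost


def best_threshold(probs, outcomes, cost_fp, cost_fn, candidates=None):
    """Return the lowest-cost candidate threshold (first one wins on ties)."""
    candidates = candidates or [i / 20 for i in range(1, 20)]
    return min(candidates,
               key=lambda t: expected_cost(probs, outcomes, t, cost_fp, cost_fn))


# Toy holdout data: predicted churn probability and observed outcome (1 = churned).
probs = [0.05, 0.10, 0.20, 0.30, 0.35, 0.45, 0.60, 0.70, 0.85, 0.95]
outcomes = [0, 0, 0, 1, 0, 0, 1, 0, 1, 1]

# Assumed costs in arbitrary units: awkward enterprise outreach is expensive,
# while self-serve outreach is cheap relative to a missed churn.
enterprise_t = best_threshold(probs, outcomes, cost_fp=300, cost_fn=400)
self_serve_t = best_threshold(probs, outcomes, cost_fp=5, cost_fn=100)
print(enterprise_t, self_serve_t)  # 0.5 0.25
```

With these assumed costs, the enterprise cutoff lands higher (fewer, more precise flags) and the self-serve cutoff lands lower (broader coverage). Different cost inputs will move both.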

Metrics That Reveal Calibration Quality

Use reliability diagrams to compare predicted risk to observed outcomes. Track Expected Calibration Error and Maximum Calibration Error. Add Brier score to capture both calibration and sharpness. Then look at business metrics like lift and precision at N, because leaders care about results. Check stability by segment first, not just global averages, or you’ll miss hidden failure modes.
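These three calibration metrics are straightforward to compute by hand. The sketch below uses equal-width bins, which is one common convention; the bin count and the toy data are assumptions. The per-bin pairs of mean prediction and observed rate are also the points you would plot on a reliability diagram.

```python
def calibration_report(probs, outcomes, n_bins=10):
    """Return (ECE, MCE, Brier score) using equal-width probability bins."""
    n = len(probs)
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, y))

    ece, mce = 0.0, 0.0
    for members in bins:
        if not members:
            continue
        mean_pred = sum(p for p, _ in members) / len(members)
        observed = sum(y for _, y in members) / len(members)
        gap = abs(mean_pred - observed)
        ece += gap * len(members) / n  # gap weighted by the bin's share of cases
        mce = max(mce, gap)            # worst single bin

    brier = sum((p - y) ** 2 for p, y in zip(probs, outcomes)) / n
    return ece, mce, brier


# Toy data: the model says 10% and 90%, reality says 25% and 75%.
probs = [0.1, 0.1, 0.1, 0.1, 0.9, 0.9, 0.9, 0.9]
outcomes = [0, 0, 0, 1, 1, 1, 1, 0]
ece, mce, brier = calibration_report(probs, outcomes)
print(round(ece, 3), round(mce, 3), round(brier, 3))  # 0.15 0.15 0.21
```

To check stability by segment, call the same function once per tier or channel and compare the results instead of relying on one global number.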

If you want a deeper dive on modern calibration, the ACM research on calibration has practical guidance. The truth most teams ignore is simple. A curve that looks fine overall can fail badly for enterprise accounts or a specific channel. Segment first, then tune.

Feel The Pain Teams Live With Today

This is where it hurts. Your team sprints after every negative label, outreach lands too early, and customers feel pressured. CSMs complain about noisy alerts. Analysts rewrite queries weekly. Leaders start to doubt the signal. That erosion of trust is expensive. Calibrated probabilities with traceability calm the noise and focus action where it prevents churn.

How False Positives Burn Time And Trust

Noisy thresholds feel productive because the queue moves. But the cost piles up. Analysts triage alerts that go nowhere. CSMs get dragged into follow-ups that create friction. Healthy customers wonder why you keep checking in. It’s a waste of hours and goodwill. The fix isn’t louder alerts. It’s better thresholds, tied to cost, checked with evidence.

We’ve seen teams cut the noise by focusing on segment-aware precision where it matters most. Enterprise gets strict thresholds with examples attached. Self-serve gets broader coverage and cheaper outreach. Most teams don’t check that split at first, which is why the alerts feel wrong. Calibrate, check the evidence, then adjust. You’ll feel the difference in a week.

If you want another angle on the link between sentiment methods and churn, this write-up from Supportbench on sentiment and churn is a useful context piece.

Why Stakeholders Push Back Without Evidence

When a VP asks for proof and you can’t click from a chart to conversations, the room stalls. People ignore the metric and start trading anecdotes. That’s the meeting where momentum dies. Transparent traceability fixes it. If every flagged segment links to representative quotes, alignment rises, and decisions stick. Evidence ends the debate.

We’re not asking for perfect models. We’re asking for a reliable threshold and examples on demand. That defuses the request you dread: “Show me where this came from.” When you can answer in seconds, the conversation moves to action. Without it, you’ll lose time, then budget, then patience.

Build The Reliability Layer For Churn Decisions

A reliability layer is a workflow. You build a clean evaluation set, choose interpretable features, calibrate, and set segment-aware thresholds. You validate with diagrams and real conversations, then monitor drift and adjust. Keep it simple, repeatable, and tied to outcomes. That’s how you move from labels to decisions you can defend.

Create The Evaluation Set And Features That Signal Risk

Use your churn-labeled holdout set as the single source of truth. Add conversational features that track with risk: turn-level intensity, unresolved loops, agent response patterns, time to first reply, and driver tags like Billing or Account Access. Keep features interpretable where you can, so you can explain why a case was flagged.

Split by tier, channel, product, and region. Decisions don’t happen at a global average. They happen in slices. A feature that predicts risk in chat might be weak in email. If you lump them together, you’ll miss it. Measure per slice first, then roll up.
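Measuring per slice can be as simple as grouping the evaluation set and comparing churn rates. The sketch below computes the lift of one feature within each channel. The row keys (`channel`, `unresolved_loop`, `churned`) and the toy rows are hypothetical.

```python
from collections import defaultdict


def lift_by_slice(rows, feature, slice_key):
    """Churn rate when `feature` is present, divided by the slice's base churn rate."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[slice_key]].append(row)

    result = {}
    for name, members in groups.items():
        base = sum(r["churned"] for r in members) / len(members)
        with_feature = [r for r in members if r[feature]]
        if not with_feature or base == 0:
            result[name] = None  # not enough signal to compute lift
            continue
        rate = sum(r["churned"] for r in with_feature) / len(with_feature)
        result[name] = rate / base
    return result


rows = [
    {"channel": "chat", "unresolved_loop": True, "churned": 1},
    {"channel": "chat", "unresolved_loop": True, "churned": 1},
    {"channel": "chat", "unresolved_loop": False, "churned": 0},
    {"channel": "chat", "unresolved_loop": False, "churned": 0},
    {"channel": "email", "unresolved_loop": True, "churned": 1},
    {"channel": "email", "unresolved_loop": True, "churned": 0},
    {"channel": "email", "unresolved_loop": False, "churned": 1},
    {"channel": "email", "unresolved_loop": False, "churned": 0},
]
lifts = lift_by_slice(rows, "unresolved_loop", "channel")
print(lifts)  # {'chat': 2.0, 'email': 1.0}
```

In this toy data the feature doubles churn likelihood in chat and carries no signal in email, which a pooled measurement would blur.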

How Do You Calibrate Scores Correctly?

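Pick a method that matches your model’s behavior. If raw scores rank cases well and the distortion is roughly sigmoid-shaped, Platt scaling can map scores to probabilities. If the distortion is monotonic but irregular, isotonic regression is more flexible, though it needs more data to avoid overfitting. If you’re working with neural outputs that look overconfident, try temperature scaling. Fit the calibrator on a held-out calibration set, validate with reliability diagrams and ECE per segment, and keep a separate test set untouched for acceptance.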
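To make one of these options concrete, here is a minimal sketch of isotonic calibration using the pool-adjacent-violators algorithm. It returns a step function rather than an interpolated curve, handles tied scores only roughly, and uses made-up scores. For real work, a tested implementation such as scikit-learn’s `IsotonicRegression` is likely the safer choice.

```python
def fit_isotonic(scores, outcomes):
    """Pool-adjacent-violators: fit a non-decreasing map from score to churn rate.

    Returns (upper_score, calibrated_probability) blocks sorted by score.
    """
    pairs = sorted(zip(scores, outcomes))
    blocks = []  # each block is [sum_of_outcomes, count, max_score_in_block]
    for score, y in pairs:
        blocks.append([float(y), 1, score])
        # Merge backwards while the previous block's mean exceeds the newest one.
        while (len(blocks) > 1
               and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]):
            total, count, top = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
            blocks[-1][2] = top
    return [(top, total / count) for total, count, top in blocks]


def calibrate(score, fitted):
    """Map a raw score to the probability of the first block that covers it."""
    for upper, prob in fitted:
        if score <= upper:
            return prob
    return fitted[-1][1]  # scores above the fitted range get the top block's value


# Toy calibration set: raw model scores and observed churn (1 = churned).
scores = [0.2, 0.4, 0.5, 0.6, 0.8, 0.9]
outcomes = [0, 1, 0, 0, 1, 1]
fitted = fit_isotonic(scores, outcomes)
print(round(calibrate(0.5, fitted), 3))  # 0.333
```

Here the raw scores 0.4, 0.5, and 0.6 are pooled into one block because only one of those three conversations churned, so each maps to about 0.33.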

If curves drift later, refresh the window and re-verify. This isn’t a one-time event. It’s maintenance. What works in Q1 may be wrong in Q3 if a policy changes or a product launches. Keep the loop tight. Calibrate, measure, check examples, adjust.
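A lightweight drift check compares mean predicted risk with the observed churn rate in a recent window. This sketch is one simple option, not a full monitoring setup; the 0.05 tolerance and the toy windows are assumptions, and a per-segment ECE comparison would be a stricter test.

```python
def needs_recalibration(probs, outcomes, tolerance=0.05):
    """Flag drift when mean predicted risk and observed churn rate diverge."""
    mean_pred = sum(probs) / len(probs)
    observed = sum(outcomes) / len(outcomes)
    return abs(mean_pred - observed) > tolerance


# Toy windows: the model keeps predicting 20% risk in both quarters.
q1 = needs_recalibration([0.2] * 5, [0, 0, 0, 0, 1])  # observed churn is 20%
q3 = needs_recalibration([0.2] * 5, [1, 0, 1, 0, 1])  # observed churn is 60%
print(q1, q3)  # False True
```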

Set Segment Aware Thresholds You Can Defend

Don’t ship a single global cutoff. Set thresholds by tier, channel, and product using cost curves and operational capacity. For enterprise, you may want higher precision to avoid awkward outreach. For self-serve, you might accept lower precision to catch more risk. Document the rationale and include example conversations near the boundary.
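Operational capacity can set the cutoff directly: rank conversations by calibrated probability, flag only as many as the team can work, and report the precision that results. The sketch below assumes hypothetical capacities and toy data, and it ignores ties at the boundary.

```python
def threshold_for_capacity(probs, outcomes, capacity):
    """Return the cutoff that flags the top `capacity` conversations, plus precision."""
    ranked = sorted(zip(probs, outcomes), reverse=True)
    top = ranked[:capacity]
    threshold = top[-1][0]  # lowest probability that still gets flagged
    precision = sum(y for _, y in top) / len(top)
    return threshold, precision


# Toy holdout data: calibrated probability and observed outcome (1 = churned).
probs = [0.05, 0.10, 0.20, 0.30, 0.35, 0.45, 0.60, 0.70, 0.85, 0.95]
outcomes = [0, 0, 0, 1, 0, 0, 1, 0, 1, 1]

# Assumed capacities: a small CSM team for enterprise, cheaper outreach for self-serve.
enterprise = threshold_for_capacity(probs, outcomes, capacity=3)
self_serve = threshold_for_capacity(probs, outcomes, capacity=6)
print(enterprise, self_serve)
```

The conversations sitting just above and below each returned cutoff are natural candidates for the boundary examples you attach to the documented rationale.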

That last part matters. Without examples, thresholds turn into opinions. With examples, they turn into decisions the room can live with. You’ll still debate edge cases. You just won’t get stuck.

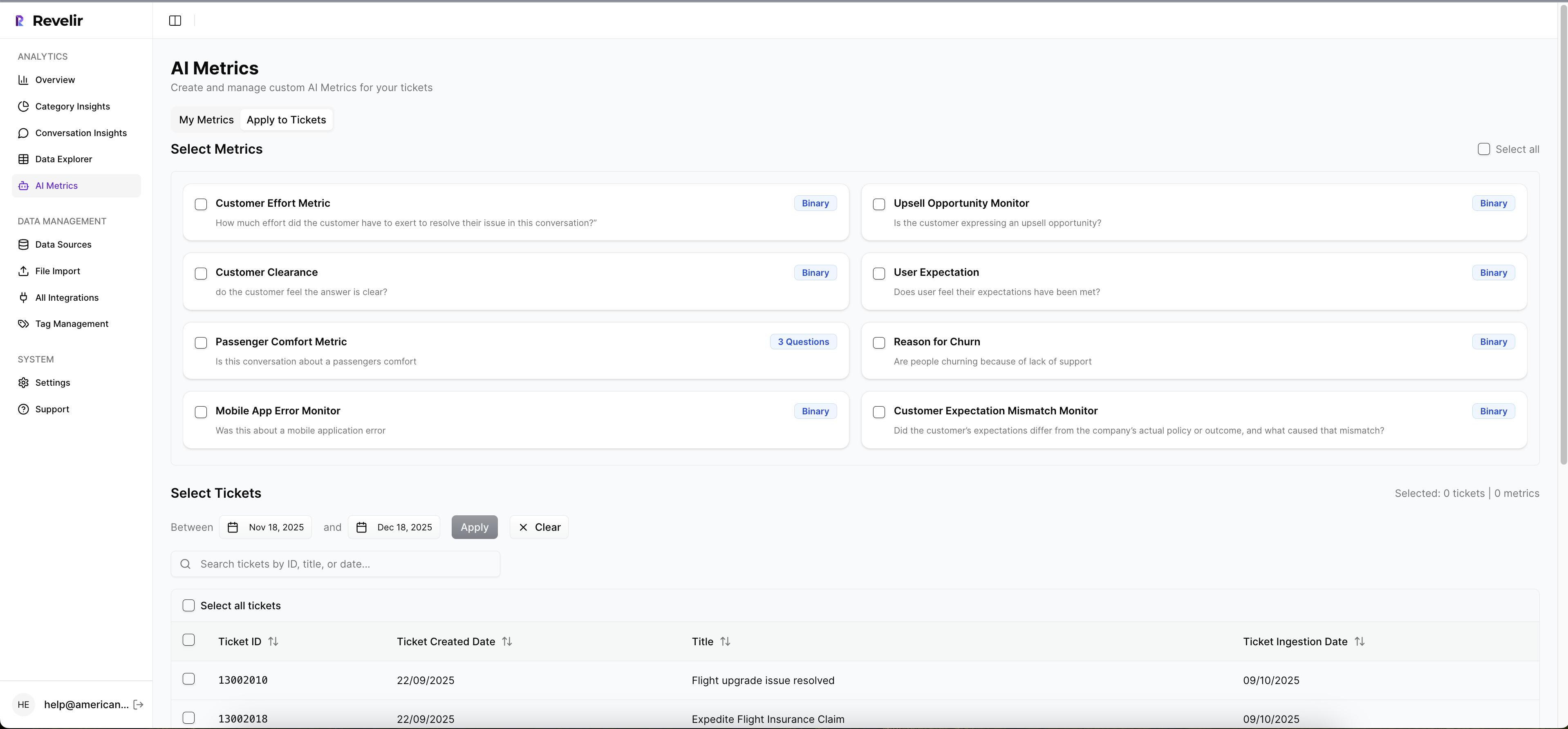

How Revelir AI Operationalizes Calibrated Churn Risk

You need two things to run this play daily: a fast way to slice tickets by risk signal and segment, and a fast way to click into the exact conversations behind any metric. Revelir AI gives you both. You work in Data Explorer and Analyze Data, then drill into Conversation Insights for proof. When someone asks “why,” you show them.

Review Risk Signals And Evidence In One Place

With Revelir AI, Sentiment and Churn Risk appear as structured columns you can filter, group, and sort in Data Explorer. Add drivers, canonical tags, and segment metadata in the same view, then click into Conversation Insights to read the transcripts and AI summaries behind any number. That audit path keeps thresholds honest and decisions defensible.

Teams use this to run weekly reviews by segment. Filter to enterprise, group by drivers, then open the tickets behind “Billing” or “Account Access.” When a leader asks why a slice looks risky, you show the metric and the quotes in seconds. That’s the difference between a metric that gets ignored and one that moves a roadmap.

Cut Review Time With Filters, Grouping, And Exports

Revelir AI reduces manual triage by letting you filter on risk signals, group by driver or canonical tag, and click straight into representative examples. If your earlier analysis suggested hours lost to false positives, this flow compresses review to the tickets that matter. You answer “what’s driving risk for this segment” without exporting a CSV or writing a query.

When you want to measure calibration quality or run cost modeling, export metrics via API to your BI or notebook, then bring the conclusions back as saved views and operating thresholds. You keep the analysis where it belongs, and the day-to-day review simple.

Governance And Recalibration You Can Explain

Use saved views to standardize monthly checks by tier and channel. When performance drifts, re-run Analyze Data, inspect risk patterns by driver, and adjust operating thresholds with evidence on screen. Because every aggregate links to conversations, recalibration moves faster. You keep a clean narrative from metric to quote, which prevents the usual governance headache.

Revelir AI exists for this loop: from unstructured tickets to structured signals, from aggregate patterns to real examples. It’s auditable, it’s fast, and it keeps teams aligned.

Conclusion

Sentiment isn’t a decision. It’s a clue. If you want churn prevention that holds up in the room, calibrate to outcomes, tune thresholds by segment and cost, then keep proof one click away. That’s the reliability layer. You’ll spot risk earlier, waste less time on noise, and earn trust because every number maps to a quote. Evidence wins. Always.

Frequently Asked Questions

How do I set up Revelir AI with my helpdesk?

To set up Revelir AI with your helpdesk, you can start by connecting your support platform, like Zendesk. Once connected, Revelir will automatically ingest historical tickets and ongoing updates, including full message transcripts and metadata. If you prefer, you can also upload CSV files of your support tickets. After the data is ingested, Revelir will apply its AI metrics and tagging system to transform your conversations into structured insights. This process typically takes just a few minutes, allowing you to access valuable metrics right away.

What if I want to analyze specific customer segments?

If you want to analyze specific customer segments, you can use Revelir's Data Explorer to filter your dataset. Start by applying filters based on customer attributes, such as plan type or region. You can also filter by sentiment or churn risk to focus on high-priority segments. Once you have your filtered view, use the 'Analyze Data' feature to group insights by relevant metrics like sentiment or churn risk. This will help you identify patterns and issues specific to those segments, enabling targeted actions for improvement.

Can I track changes in churn risk over time?

Yes, you can track changes in churn risk over time using Revelir AI. By leveraging the Analyze Data feature, you can group churn risk metrics by time periods, such as weekly or monthly. This allows you to see trends and fluctuations in churn risk across different customer segments. Additionally, you can drill down into specific tickets to understand the underlying reasons for any changes, ensuring you have the context needed to make informed decisions about customer retention strategies.

When should I use custom AI metrics in Revelir?

You should consider using custom AI metrics in Revelir when you want to capture specific business needs that standard metrics might not cover. For example, if your organization has unique drivers of churn or customer effort that aren't reflected in the default metrics, you can define custom metrics that align with your business language. This customization allows you to gain deeper insights into your support conversations and tailor your analysis to better inform product and CX decisions.

Why does sentiment alone not prioritize retention work?

Sentiment alone is often insufficient for prioritizing retention work because it doesn't provide the full context of customer interactions. Revelir AI helps address this by calibrating sentiment scores against observed outcomes and segmenting data by channel. This way, you can identify not just whether sentiment is positive or negative, but also understand the specific drivers behind that sentiment. By linking sentiment to real conversations and evidence, you can make more informed decisions about where to focus your retention efforts.